Applying the Tolman-Eichenbaum Machine to Generalization Tasks in Autonomous Driving

Components To A WorldMap Model For Autonomous Driving Agents

Day 138 at Chez Doritos.

One of the most remarkable abilities of the mammalian brain is its capacity to generate flexible behavior that adapts across different contexts.

For example, let's imagine you spent months learning how to drive a car in your hometown. You study the rules, learn the dimensions of the car, and familiarize yourself with the streets — you know the layout of intersections, traffic patterns, and local driving customs.

Note to self. A phatic finger is a public gesture carrying shared meaning on the highways of the Australian Outback.

Then you travel to a city you've never visited. Even though you've never driven on these particular streets, you are able to navigate and drive effectively.

But how is that possible?

If you think about it, you have never been in that particular situation, so this must be a totally new problem, right? Most traditional robot trajectory learning methods that rely on video-based training would treat it that way. In reality though, for us meat bags, the answer lies in our brain's ability to generalize. We are able to strip away the particular sensory context of our hometown streets and extract the abstract notion of general driving procedures.

At the same time, we also know the general principles of how roads and road rules work, so one quick assessment of the traffic patterns and signs is enough to relate that particular layout to our inner model of driving.

Unless you are driving in India, I suppose. That's not a dunk on India. I sat on the board of an RBI-regulated NBFC in India for a while and got to enjoy this frequently.

Although I was not allowed to drive in India, for obvious reasons, I am able to drive in many parts of the world. How?

The Cognitive Map

Our ability to generalize driving patterns requires information about the world to be organized into a coherent, multi-purpose framework, also known as a cognitive map.

His name was Edward C. Tolman

Let's run an experiment. You are working on an autonomous car. You drive the car to a test ground and train it. Every time the car finds its way to the goal, the driving agent gets a reward. After a few successful trials, you move the car to a new test ground. Normally, the car would try to take the familiar path, but what happens if it finds that path blocked? Now the model is faced with a decision.

Which alternative path to pursue instead?

Let's think about how to approach this decision-making task.

One way is to have the car learn by association, which, similar to real life, means that it would take the path most similar to the one that originally led to the reward. If, however, our car had some sort of internal map of the spatial layout, it would choose a path that heads in the direction of the reward, even though that path had never been experienced before and so is not directly associated with reward.
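The difference between the two strategies can be sketched in a few lines of Python. This is a toy illustration, not an implementation of any particular driving stack: the arm headings, the start and goal coordinates, and the function names are all made-up assumptions. The trained route is blocked, and each agent has to pick one of the remaining open paths, described only by its heading in degrees.

```python
import math


def angular_distance(a_deg, b_deg):
    """Smallest absolute difference between two headings, in degrees."""
    diff = abs(a_deg - b_deg) % 360.0
    return min(diff, 360.0 - diff)


def associative_choice(open_arms, trained_heading):
    """Pick the open path most similar to the heading rewarded in training."""
    return min(open_arms, key=lambda arm: angular_distance(arm, trained_heading))


def map_based_choice(open_arms, start, goal):
    """Pick the open path pointing most directly at the goal on an internal map."""
    bearing = math.degrees(math.atan2(goal[1] - start[1], goal[0] - start[0]))
    return min(open_arms, key=lambda arm: angular_distance(arm, bearing))


# Hypothetical test ground: all numbers below are illustrative assumptions.
start, goal = (0.0, 0.0), (4.0, 3.0)          # goal lies at roughly 37 degrees
trained_heading = 90.0                         # the rewarded route, now blocked
open_arms = [0.0, 35.0, 75.0, 120.0, 180.0]    # headings of the open paths

associative_arm = associative_choice(open_arms, trained_heading)
map_arm = map_based_choice(open_arms, start, goal)

print("associative agent takes heading:", associative_arm)
print("map-based agent takes heading:", map_arm)
```

With these numbers the associative agent picks the path that looks most like the one it was trained on, while the map-based agent picks a path it has never taken because it points toward where the reward actually is.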

Experiments like these, but with live animals, were conducted in the 1930s by Edward Tolman, an American psychologist. He also coined the term "cognitive map" for the idea that animals have something like a mental map of their surrounding space. While his research was impactful, it took 40 years until neuroscientists were able to see how such a map manifests in the neural activity of the brain.

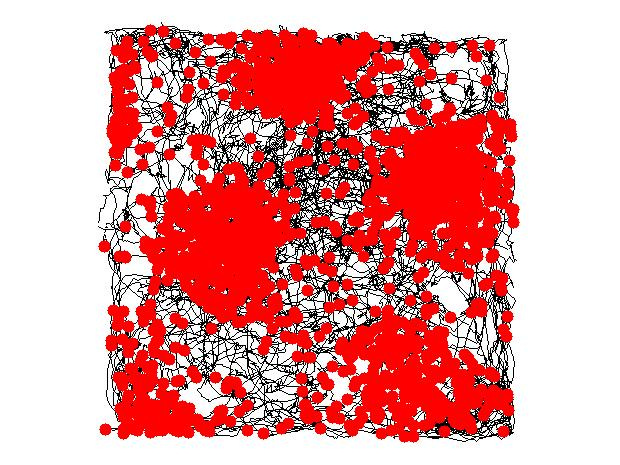

Source: Trajectory of a rat through a square environment is shown in black. Red dots indicate locations at which a particular entorhinal grid cell fired.

Neurons in Hippocampal Formation

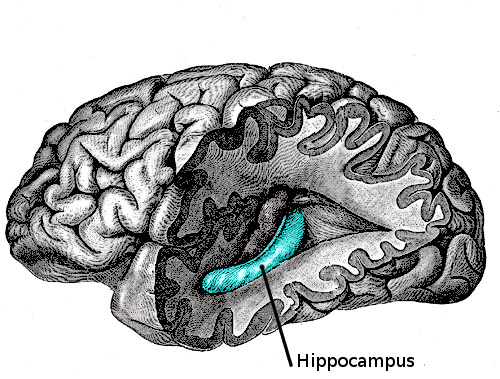

Although we now know that cognitive maps serve as general-purpose representations of the world, historically they were usually studied only in the context of spatial behavior. Spatial behavior refers to how humans and animals navigate, interact with, and use space in their environment. The predominant view is that the workhorse of cognitive mapping in the mammalian brain is the hippocampal formation. It includes the hippocampus and the entorhinal cortex, which serves as a gateway through which information flows in and out of the hippocampus.

The hippocampal formation contains several types of specialized, spatially selective neurons. Looking at them shows how knowledge is organized into a cognitive map at the level of single cells.

Components of a Tolman-Eichenbaum Machine

Let's briefly review the major wet-ware components of the hippocampus and entorhinal cortex: