Exploring Chain, Buffer, Tree of Thought, and ReAct for Cognitive Agents

An exploration into reasoning techniques for your cognitive agent.

OpenAI’s new “o1” model has recently made some moderate waves in the community. There is a lingering discussion on reasoning and artificial general intelligence, and I believe I am in a unique position to chime in on it.

In this post, I will explain my thoughts on the matter by exploring the following modern reasoning approaches.

Chain of Thought

Buffer of Thought

Tree of Thought

Reasoning/Acting (ReAct)

For my paying subscribers, I have added links to the datasets needed to train LLMs in these techniques after the paywall below.

What is a thought?

We humans mostly use the concept of “thought” for a conscious act in which we become aware of a certain fact, theme, or idea.

“I just thought of you”, “This thought just popped into my head”, or “Weird thought, but what if we would approach the problem like this” are uses in the English language that might illustrate this concept well. Therefore, for us humans, the term “thought” is closely connected to the terms awareness and consciousness.

Of course, we can’t make the same deduction for our cognitive agents, as their “thought” is merely a statistical approximation of previously learned facts and relationships.

So when my ReAct-based cognitive agent Matt “thinks” about a logical problem, he appears to base his reasoning on an inner monologue produced by statistical approximation rather than on conscious awareness.

Over the last few months, I hope to have shown that reasoning is a powerful way to structure the inference of a cognitive agent’s “thoughts” to reach a specific goal. OpenAI has now launched a new model that, by chaining several of these simple thoughts, can reason towards a complex logical conclusion. And their results are truly impressive.

Cognitive agents mimic human reasoning by recognizing patterns, drawing connections, and applying learned knowledge to new situations. These patterns are learned through specifically prepared datasets and are executed as a multi-step process.

Starting with the same technique OpenAI used, I will explain how these reasoning techniques work and how you can use them to get similar results.

Chain of Thought (CoT)

Chain of thought is a prompting technique that guides language models to generate step-by-step explanations by chaining simpler, intermediate logical deductions towards a more complex reasoning construct. By doing so, chain of thought prompting enables cognitive models to tackle intricate tasks more effectively and produce more transparent, interpretable outputs.

Provided with the right training data, the technique works by explicitly asking the cognitive agent to show its work or explain its reasoning as it progresses towards a solution. This step-by-step breakdown not only improves the accuracy of the final answer but also provides insight into the model's decision-making process.
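The "show your work" idea can be sketched in a few lines of Python. This is a minimal illustration, not the way any particular model is trained or prompted: `build_cot_prompt`, `parse_cot_response`, and `fake_model` are hypothetical names, and `fake_model` returns a canned reply in place of a real LLM call.

```python
import re
from typing import List, Optional, Tuple


def build_cot_prompt(question: str) -> str:
    """Wrap a question in an instruction that asks for numbered steps."""
    return (
        f"Question: {question}\n"
        "Think step by step. Number each step, then finish with a line "
        "that starts with 'Answer:'."
    )


def parse_cot_response(text: str) -> Tuple[List[str], Optional[str]]:
    """Split a reply into its numbered reasoning steps and the final answer."""
    steps: List[str] = []
    answer: Optional[str] = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        numbered = re.match(r"^\d+[.)]\s+(.*)$", line)
        if numbered:
            steps.append(numbered.group(1))
        elif line.lower().startswith("answer:"):
            answer = line.split(":", 1)[1].strip()
    return steps, answer


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; always returns the same reply."""
    return (
        "1. The train covers 120 km in 2 hours.\n"
        "2. Speed is distance divided by time: 120 / 2 = 60.\n"
        "Answer: 60 km/h"
    )


prompt = build_cot_prompt(
    "A train travels 120 km in 2 hours. What is its average speed?"
)
steps, answer = parse_cot_response(fake_model(prompt))
for number, step in enumerate(steps, start=1):
    print(f"step {number}: {step}")
print("final:", answer)
```

Keeping the steps separate from the final answer is what makes the reasoning inspectable later on.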

Chain of thought prompting is particularly valuable in areas such as mathematics, logic, and complex problem-solving, where the path to the solution is as important as the solution itself.

One of the key advantages of this technique is its ability to enhance the reliability and verifiability of AI-generated responses. By exposing the intermediate steps, it becomes easier to identify errors, logical flaws, or alternative approaches. This transparency also facilitates better human-AI interactions, as users can more readily understand and evaluate the agent’s reasoning.
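To make the verifiability point concrete, here is a small sketch that scans exposed reasoning steps for simple arithmetic claims of the form `a op b = c` and flags the ones that do not hold. The function name `check_steps` and the example steps are made up for illustration; a real checker would need to handle far more than two-operand arithmetic.

```python
import operator
import re
from typing import List, Tuple

OPS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}

# Matches claims such as "24 - 3 = 22" inside free text.
NUMBER = r"-?\d+(?:\.\d+)?"
CLAIM = re.compile(rf"({NUMBER})\s*([+\-*/])\s*({NUMBER})\s*=\s*({NUMBER})")


def check_steps(steps: List[str]) -> List[Tuple[int, str]]:
    """Return (step_number, claim) pairs whose arithmetic does not hold."""
    errors: List[Tuple[int, str]] = []
    for number, step in enumerate(steps, start=1):
        for a, op, b, claimed in CLAIM.findall(step):
            claim = f"{a} {op} {b} = {claimed}"
            if op == "/" and float(b) == 0:
                errors.append((number, claim))  # division by zero is never valid
                continue
            actual = OPS[op](float(a), float(b))
            if abs(actual - float(claimed)) > 1e-9:
                errors.append((number, claim))
    return errors


example_steps = [
    "Each box holds 6 eggs, so 4 boxes hold 4 * 6 = 24 eggs.",
    "Three eggs break, leaving 24 - 3 = 22 eggs.",
]
errors = check_steps(example_steps)
print(errors)
```

In this example the second step contains the faulty claim, which would stay hidden if the model only returned a final number.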

The disadvantage is that chain of thought explores one specific path towards a solution and will fail if the model has not been trained for this use case.

Buffer of Thoughts (BoT)

Buffer of thoughts expands upon the chain of thought approach and is more than a mere prompting technique. BoT introduces meta-buffers and thought templates to enhance the logical multi-step problem-solving capabilities of cognitive agents.

The buffer of thoughts technique involves maintaining a "buffer" or temporary storage of intermediate thoughts and ideas generated during the reasoning process.

Here, the agent is prompted to generate and store multiple potential paths or ideas related to solving a problem, rather than committing to a single line of reasoning immediately. This buffer acts as a working memory, allowing the model to explore various angles, hold onto potentially useful information, and dynamically adjust its thinking as it progresses through a task.

Key aspects of the buffer of thoughts technique include:

Generation of multiple ideas: The model is encouraged to produce several potential approaches or relevant pieces of information.

Storage and retrieval: These thoughts are temporarily stored and can be accessed or referenced as needed throughout the problem-solving process.

Dynamic updating: The buffer can be updated as new information becomes available or as the model's understanding of the problem evolves.

Selective focus: The model can choose which thoughts in the buffer are most relevant or promising to pursue further.

Iterative refinement: The approach allows for iterative improvement of ideas, with the ability to revisit and refine earlier thoughts.

This technique can be particularly effective for complex tasks that require creativity, lateral thinking, or the integration of multiple concepts. By maintaining a buffer of thoughts, the model can potentially avoid getting stuck in suboptimal reasoning paths and may discover novel connections or solutions that might be missed in a more linear thinking process.
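The key aspects listed above can be sketched as a small working-memory structure. This follows the description in this post rather than any published BoT implementation: the class names, the fixed capacity, and the hand-assigned relevance scores are all assumptions made for illustration. In a real agent, the model itself would propose and score the thoughts.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Thought:
    text: str
    score: float  # hypothetical relevance score, higher is more promising
    revisions: int = 0


@dataclass
class ThoughtBuffer:
    capacity: int = 3
    thoughts: Dict[str, Thought] = field(default_factory=dict)

    def store(self, key: str, text: str, score: float) -> None:
        """Storage: keep a thought, evicting the weakest one if the buffer is full."""
        self.thoughts[key] = Thought(text, score)
        if len(self.thoughts) > self.capacity:
            weakest = min(self.thoughts, key=lambda k: self.thoughts[k].score)
            del self.thoughts[weakest]

    def refine(self, key: str, text: str, score: float) -> None:
        """Dynamic updating and iterative refinement of an existing thought."""
        thought = self.thoughts[key]
        thought.text = text
        thought.score = score
        thought.revisions += 1

    def focus(self, k: int = 1) -> List[Thought]:
        """Selective focus: return the k most promising thoughts."""
        ranked = sorted(self.thoughts.values(), key=lambda t: t.score, reverse=True)
        return ranked[:k]


# Task: sum the numbers from 1 to 100. Several ideas are generated up front.
buffer = ThoughtBuffer(capacity=3)
buffer.store("brute", "Add the numbers one by one", 0.2)
buffer.store("formula", "Use a closed-form formula for the sum", 0.6)
buffer.store("pairs", "Pair the first and last terms", 0.5)
buffer.store("lookup", "Look the answer up somewhere", 0.1)  # evicted right away

# A thought is revisited and improved as understanding grows.
buffer.refine("pairs", "Pair first and last terms: 50 pairs that each sum to 101", 0.9)

best = buffer.focus(1)[0]
print(best.text, "| revisions:", best.revisions)
```

The eviction rule is deliberately crude. The point is only that thoughts persist between steps and can be revisited, instead of being lost once the chain moves on.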

Tree of Thoughts (ToT)

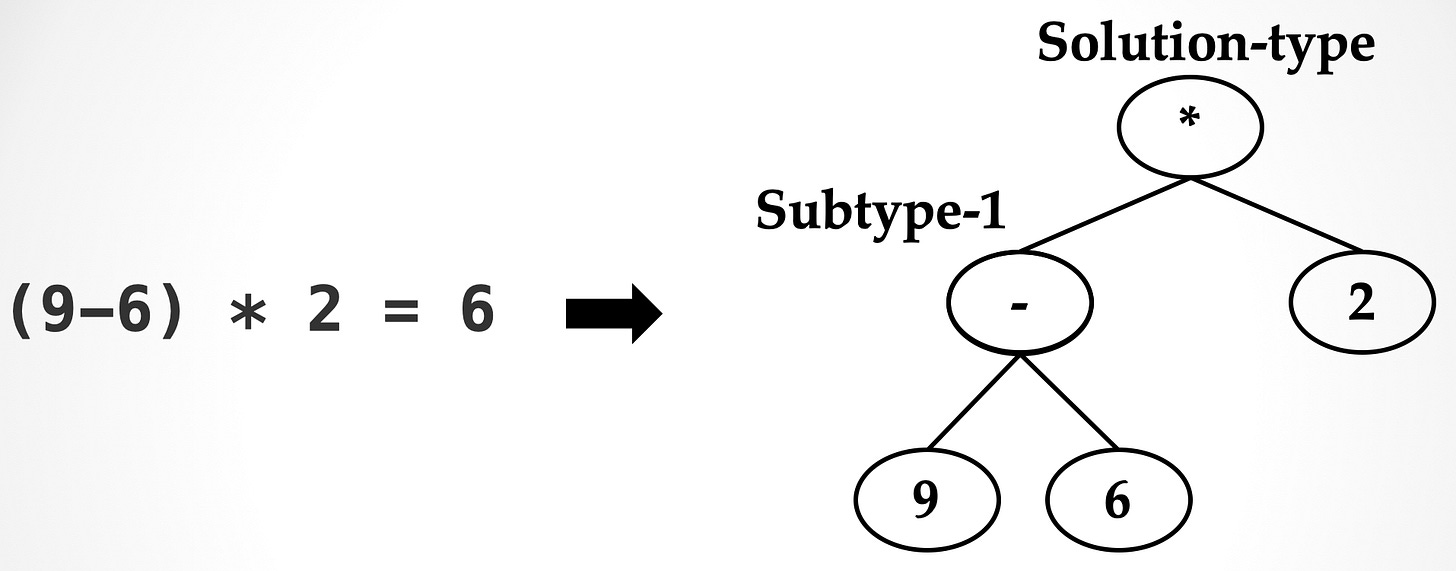

Tree of thoughts is an advanced prompting technique that extends the concepts of chain of thought into a more structured, hierarchical approach to problem-solving. ToT generalizes the chain of thought prompting approach and enables exploration over coherent units of text (“thoughts”) that serve as intermediate steps toward solving a problem.

The ToT method organizes the reasoning process into a tree-like structure, allowing for parallel exploration of multiple solution paths.

Key features of the tree of thoughts approach include:

Branching structure: The problem-solving process is visualized as a tree, where each node represents a thought or decision point, and branches represent different potential paths or strategies.

Parallel exploration: Multiple solution paths can be pursued simultaneously, rather than following a single linear chain of reasoning.

Depth and breadth: The technique allows for both deep dives into specific lines of thought (depth-first search) and broad exploration of multiple alternatives (breadth-first search).

Evaluation and pruning: Less promising branches can be "pruned" or discarded, focusing computational resources on more viable solutions.

Backtracking: The model can return to earlier decision points and explore alternative branches if a particular path proves unfruitful.

Hierarchical organization: Thoughts are organized in a hierarchical manner, with high-level strategies branching into more specific sub-strategies or steps.
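A toy version of the branching, evaluation, and pruning steps above can be written as a breadth-first search with a fixed beam width. Everything here is an assumption chosen for illustration: the number puzzle, the three operations, and the distance-based `evaluate` function, which stands in for the self-evaluation an LLM would perform on its own thoughts. Explicit backtracking is not shown.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Each "thought" applies one operation to the current value.
OPS: Dict[str, Callable[[int], int]] = {
    "+3": lambda x: x + 3,
    "*2": lambda x: x * 2,
    "-1": lambda x: x - 1,
}


def propose(value: int) -> List[Tuple[str, int]]:
    """Branching: generate every possible next thought from a node."""
    return [(name, fn(value)) for name, fn in OPS.items()]


def evaluate(value: int, target: int) -> int:
    """Score a node; closer to the target is better (stand-in for an LLM judge)."""
    return -abs(target - value)


def tree_of_thoughts(
    start: int, target: int, beam_width: int = 3, max_depth: int = 8
) -> Optional[List[str]]:
    """Explore level by level, keeping only the best `beam_width` nodes."""
    frontier: List[Tuple[int, List[str]]] = [(start, [])]
    for _ in range(max_depth):
        candidates: List[Tuple[int, List[str]]] = []
        for value, path in frontier:
            for name, new_value in propose(value):
                new_path = path + [name]
                if new_value == target:
                    return new_path
                candidates.append((new_value, new_path))
        # Pruning: discard all but the most promising branches.
        candidates.sort(key=lambda c: evaluate(c[0], target), reverse=True)
        frontier = candidates[:beam_width]
    return None


def replay(start: int, path: List[str]) -> int:
    """Apply a path of operations to the start value."""
    value = start
    for name in path:
        value = OPS[name](value)
    return value


path = tree_of_thoughts(start=2, target=17)
print(path, "->", replay(2, path) if path else None)
```

Because pruning is greedy, the path found is not guaranteed to be the shortest one. A wider beam explores more alternatives at a higher cost, which mirrors the trade-off between breadth and compute in the real technique.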

In my opinion, ToT is particularly useful for complex problems that have multiple potential solutions or require considering various scenarios. It allows the cognitive agent model to systematically explore a wide solution space, compare different approaches, and potentially arrive at more innovative or comprehensive solutions.

Tree of thoughts can be especially effective in tasks such as strategic planning, creative problem-solving, and decision-making under uncertainty, where considering multiple alternatives and their potential outcomes is crucial.

Reasoning/Acting (ReAct)

When I began building in the cognitive agent space, I was immediately hooked on the concept of Reasoning/Acting agents, mainly because, in addition to structured reasoning, they can use tools, in particular the “human” tool that allows the agent to ask questions. When working with Matt specifically, my main concern was solving a parameter optimization or fine-tuning problem. I used this type of model because I was looking for a method that lets me monitor the model’s actual thought process and identify areas where it is lacking and might need improvement.

The benefit of ReAct reasoning, especially in its LangChain applications, is that we can monitor the agent’s “thought” process and thus understand how the model uses its own internal representations to generate thoughts and how this process is grounded in insights found in the training data.

At its core, ReAct integrates language-based reasoning with the ability to take action in an environment. The agent alternates between reasoning about its current state and situation and taking actions based on that reasoning. I specifically liked this approach because it is designed to handle complex, multi-step tasks that require both thinking and doing. In other words, action and observation are incorporated into the reasoning process. This makes the agent’s reasoning applicable to a wide range of tasks, from question-answering to interactive problem-solving.
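The alternation between thought, action, and observation can be sketched as a plain loop. This is not how LangChain or Matt implement it: `react_loop`, `scripted_policy`, and both tools are hypothetical, and the scripted policy replaces the LLM that would normally decide the next step from the transcript.

```python
import operator
from typing import Callable, Dict, List, Optional, Tuple

Step = Tuple[str, str, str]  # (thought, action, action_input)


def calculator(expression: str) -> str:
    """Tool: evaluate 'a op b' where op is one of + - * /."""
    ops = {"+": operator.add, "-": operator.sub,
           "*": operator.mul, "/": operator.truediv}
    a, op, b = expression.split()
    return str(ops[op](float(a), float(b)))


def human(question: str) -> str:
    """Stand-in for the 'human' tool; a real agent would ask the user."""
    return "The order contains 12 items at 2.5 each."


def scripted_policy(transcript: List[str]) -> Step:
    """Hypothetical replacement for the LLM: picks the next step from the transcript."""
    observations = [l for l in transcript if l.startswith("Observation: ")]
    if not observations:
        return ("I do not know the order details yet.",
                "human", "What does the order contain?")
    if len(observations) == 1:
        return ("I need to multiply quantity by unit price.",
                "calculator", "12 * 2.5")
    return ("I now have the total.",
            "finish", observations[-1].split(": ", 1)[1])


def react_loop(
    question: str,
    policy: Callable[[List[str]], Step],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> Tuple[Optional[str], List[str]]:
    """Alternate reasoning and acting until the policy finishes or steps run out."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, action_input = policy(transcript)
        transcript.append(f"Thought: {thought}")
        if action == "finish":
            transcript.append(f"Answer: {action_input}")
            return action_input, transcript
        transcript.append(f"Action: {action}[{action_input}]")
        transcript.append(f"Observation: {tools[action](action_input)}")
    return None, transcript


answer, transcript = react_loop(
    "What is the total price of the order?",
    scripted_policy,
    {"calculator": calculator, "human": human},
)
print("\n".join(transcript))
```

The transcript is the part I care about most: every thought, action, and observation is recorded in order, which is exactly what makes the agent's behavior easy to monitor.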

Conclusion

If anything, I think the new model by OpenAI shows several things. The benefits from training LLMs have slowed down, and industry leaders are now looking into new architectures to gain a lead. We are still far away from a true reasoning model that helps us humans work through a complex problem as a true counterpart in the thought process. Even worse, OpenAI does not disclose the “thought” process to the user. Due to the increased engagement with the model in its step-by-step reasoning process, the cost of using the model increases substantially. This is not that surprising, but it is one of the many reasons why I am pushing for high-quality local agents when building my models.

Whether this new model will gain adoption depends on whether we can use it in the same way. A lot of us use ChatGPT or Claude like information engines that summarize interesting facts in new and surprising ways. I think we should use these reasoning systems as naive but intelligent new co-workers. Provide them with the right data, give them a task, and let them surprise you with their output.

Paywall