Code Clinic | Orchestrating Transformers Agents 2.0 for Internet Search

What's better than one ReAct agent? Two!

It feels like I haven’t done a Code Clinic in a while. Granted, I have been sprinkling code bits here and there, but we haven’t done a full-on exercise together.

Well. Here it is. My introduction to Transformers Agents 2.0.

The link to the full code, plus support, is available to members behind the paywall. By the way, three referrals earn you one month of free membership, and they would help me out a lot.

For this exercise, I really wanted to explore the new(ish) Hugging Face Transformers Agents 2.0 library. Why? Well, it's ReAct, and I think ReAct agents beat plain Chain of Thought by a wide margin, because they interleave reasoning steps with actions (tool calls) and the observations those actions return.

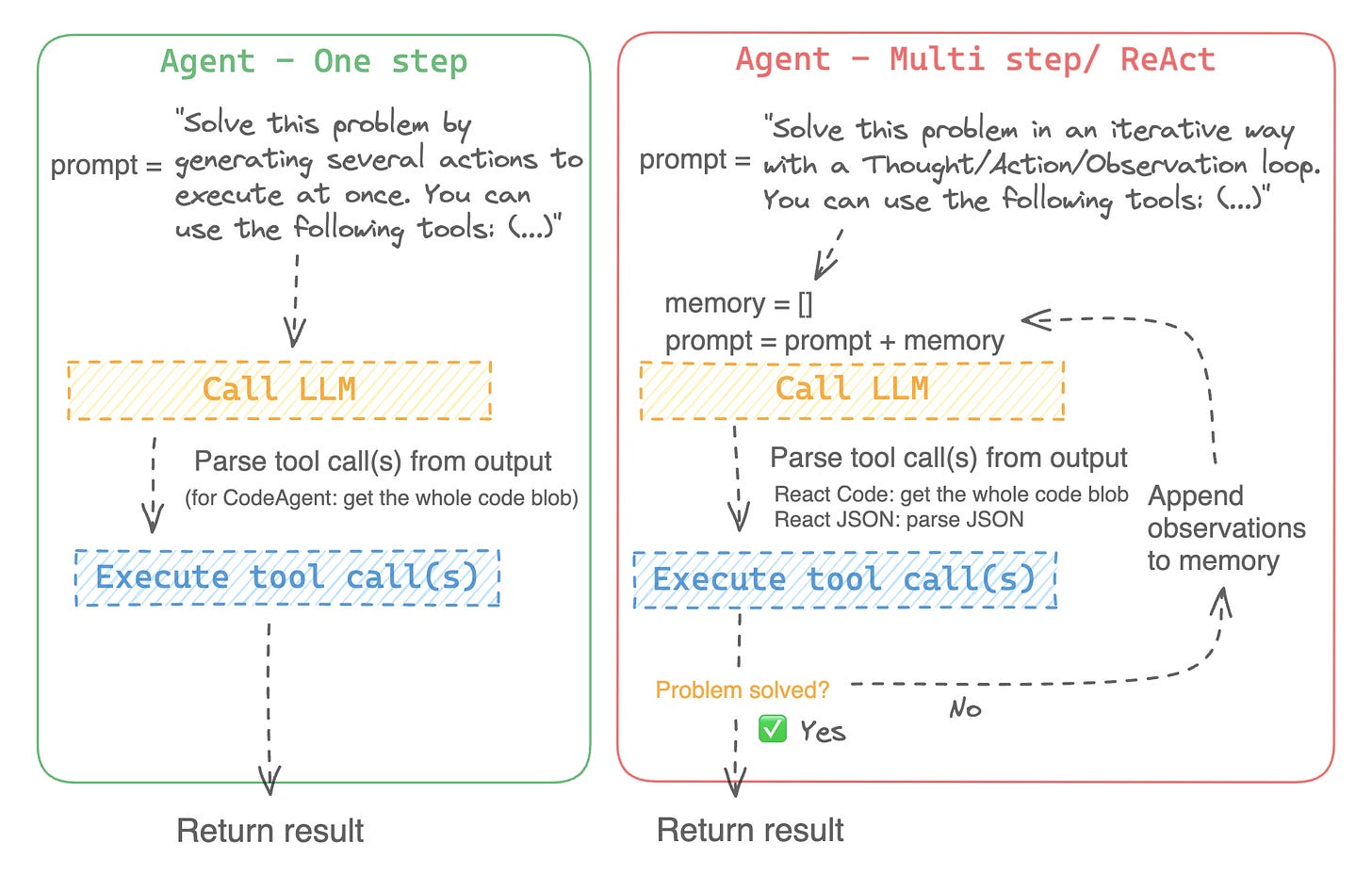

Also, it comes with this neat picture.

Notably, what distinguishes the ReAct agent in this framework from a one-step "chatbot" is that it can work through a multi-step, iterative problem-solving task, keeps a memory in which its observations are stored, and can execute tools. Implementing a Transformers agent is actually quite straightforward, and we begin by setting up a couple of things. I am starting from this agent cookbook because I believe it showcases the clarity, simplicity, and modularity of the Agents 2.0 approach.
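To make the loop concrete, here is a minimal, library-free sketch of the ReAct cycle described above: think, call a tool, store the observation in memory, repeat until a final answer. It is not how transformers.agents is implemented. The names MiniReActAgent, Step, add_tool, and scripted_policy are hypothetical, and a scripted policy function stands in for the LLM so the example runs offline. Ending the loop with a "final_answer" action loosely mirrors the library's final-answer tool, but treat that as an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Step:
    """One ReAct iteration as stored in the agent's memory."""
    thought: str
    tool: str
    tool_input: str
    observation: str


# A policy stands in for the LLM (hypothetical): given the task and the memory
# so far, it returns (thought, tool_name, tool_input).
Policy = Callable[[str, List[Step]], Tuple[str, str, str]]


@dataclass
class MiniReActAgent:
    policy: Policy
    tools: Dict[str, Callable[[str], str]]
    max_iterations: int = 5
    memory: List[Step] = field(default_factory=list)

    def run(self, task: str) -> str:
        for _ in range(self.max_iterations):
            thought, tool, tool_input = self.policy(task, self.memory)  # reason
            if tool == "final_answer":  # the loop ends when the policy answers
                return tool_input
            observation = self.tools[tool](tool_input)  # act
            self.memory.append(Step(thought, tool, tool_input, observation))  # observe + remember
        raise RuntimeError("No final answer within max_iterations")


def add_tool(csv_numbers: str) -> str:
    """Toy tool: add comma-separated integers."""
    return str(sum(int(x) for x in csv_numbers.split(",")))


def scripted_policy(task: str, memory: List[Step]) -> Tuple[str, str, str]:
    """Scripted 'LLM': call the tool once, then answer with its observation."""
    if not memory:
        return ("I should add the numbers.", "add", "40,2")
    return ("The last observation answers the task.", "final_answer", memory[-1].observation)


agent = MiniReActAgent(policy=scripted_policy, tools={"add": add_tool})
print(agent.run("What is 40 + 2?"))  # prints 42
```

The max_iterations cap matters in practice: without it, a model that never decides to answer would loop forever.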

Step 1 - Setup

Granted, the documentation is still a bit thin, but I suppose this is where this write-up comes in. In general, I prefer to implement ReAct agents, and the multi-step example on the right of the diagram above makes it obvious why.

Anyway, we begin the setup by installing a couple of packages. The key ones are the Hugging Face packages huggingface_hub and transformers (with the agents extra). We also install duckduckgo-search, which the agent's DuckDuckGo search tool uses as its default search API.

```
%pip install --upgrade huggingface_hub "transformers[agents]" duckduckgo-search
```

Then we start with the imports. For this implementation, I don't want to use a local LLM, because I want to use a free remote 70B-class model. The reason is that, based on my work with Matt, local models just don't perform as well, which shouldn't be much of a surprise.

HfApiEngine is an engine that wraps a Hugging Face Inference API client to execute the LLM. ReactAgent is, as we already know, an agent that follows the ReAct ("Reason and Act") logic: it works step by step, and each step consists of one thought based on the information the agent has already gathered, followed by one tool call and its execution. ReactAgent comes in two classes in the library, ReactJsonAgent and ReactCodeAgent. For now, we focus on ReactJsonAgent, which writes its tool calls in JSON rather than as Python code. DuckDuckGoSearchTool is, I suppose, self-explanatory. The ManagedAgent concept is explained in Step 3 - Scaling to more than one agent below.
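Here is a minimal sketch of what "writing tool calls in JSON" amounts to: the model emits a JSON blob naming a tool and its input, and the framework parses it and calls that tool. The "Action:" marker and the "action"/"action_input" keys are my assumption, modeled on the format the library's default JSON prompt asks for; check the prompt in your installed version. parse_json_action, dispatch, and the stub search tool are hypothetical.

```python
import json
from typing import Any, Callable, Dict


def parse_json_action(llm_output: str) -> Dict[str, Any]:
    """Return the JSON object written after 'Action:' in a model reply."""
    _, marker, after = llm_output.partition("Action:")
    start, end = after.find("{"), after.rfind("}")
    if not marker or start == -1 or end == -1:
        raise ValueError("no JSON action found in model output")
    return json.loads(after[start : end + 1])


def dispatch(action: Dict[str, Any], tools: Dict[str, Callable[..., Any]]) -> Any:
    """Look up the named tool and call it with the parsed input."""
    tool = tools[action["action"]]
    tool_input = action["action_input"]
    # Dict inputs become keyword arguments; anything else is passed positionally.
    return tool(**tool_input) if isinstance(tool_input, dict) else tool(tool_input)


reply = """Thought: I need to look this up on the web.
Action:
{
  "action": "search",
  "action_input": {"query": "Hugging Face CEO"}
}"""
tools = {"search": lambda query: f"results for {query!r}"}  # hypothetical stub tool
print(dispatch(parse_json_action(reply), tools))  # results for 'Hugging Face CEO'
```

One JSON action means exactly one tool call per step, which keeps parsing simple and predictable.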

```python
from transformers.agents import HfApiEngine, ReactJsonAgent, ReactCodeAgent, ManagedAgent, DuckDuckGoSearchTool
from huggingface_hub import login
```

ReactCodeAgent is imported as well because we need it in Step 3. We also import the login function from huggingface_hub. The usage can be seen here. If you don't have a Hugging Face token yet, I recommend setting up your HF account first and then getting your token here before you continue.

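```python
hf_token = "<YOUR TOKEN>"  # your Hugging Face access token
# Log in and also store the token in your git credential helper
login(hf_token, add_to_git_credential=True)
```

If you have done everything correctly, you will likely see a printout like this in your notebook: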

```
Token is valid (permission: read).
Your token has been saved in your configured git credential helpers (store).
Your token has been saved to <your path>/.cache/huggingface/token
Login successful
```

And that was it already. Now we can start building our agent.

Step 2 - Building a basic ReAct Agent

The model I have selected for this exercise is Qwen2.5-72B-Instruct because it is a large model that performs well (in both speed and quality) and is freely available.

We pass the model to the HfApiEngine to create an instance of our agent’s “brain”.
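What does the "brain" need to provide? As I read the Transformers Agents docs, the llm_engine can be any callable that takes a list of chat messages (dicts with role and content) plus stop_sequences and returns a string; HfApiEngine is one such callable backed by the Inference API. The ScriptedEngine below is a hypothetical offline stand-in that follows that contract, which can be handy for testing prompts without spending API calls. Treat the exact contract as an assumption and check it against your installed version.

```python
from typing import Any, Dict, List, Optional


class ScriptedEngine:
    """Hypothetical offline stand-in for HfApiEngine.

    Assumed contract: chat messages in, string out, output cut before the
    earliest stop sequence.
    """

    def __init__(self, replies: List[str]):
        self.replies = list(replies)  # canned replies, returned in order
        self.calls: List[List[Dict[str, str]]] = []  # every prompt received

    def __call__(
        self,
        messages: List[Dict[str, str]],
        stop_sequences: Optional[List[str]] = None,
        **kwargs: Any,  # e.g. a grammar argument; ignored by this stub
    ) -> str:
        self.calls.append(messages)
        text = self.replies.pop(0)
        # Truncating at each stop sequence in turn leaves the text cut at the earliest one.
        for stop in stop_sequences or []:
            index = text.find(stop)
            if index != -1:
                text = text[:index]
        return text


engine = ScriptedEngine(["Thought: easy one.\nObservation: the model should not write this"])
out = engine([{"role": "user", "content": "What is 2 + 2?"}], stop_sequences=["Observation:"])
print(repr(out))  # 'Thought: easy one.\n'
```

The stop sequence matters because, in a ReAct loop generally, the model should stop right before it would invent its own "Observation:"; the framework then inserts the real tool output instead.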

```python
llm_engine = HfApiEngine(model="Qwen/Qwen2.5-72B-Instruct")
```

If that executes correctly, we can pass this llm_engine object to create a basic instance of the previously introduced ReactJsonAgent.

```python
agent = ReactJsonAgent(tools=[], llm_engine=llm_engine, add_base_tools=True)
```

Two things are important to note. First, the tools list is initially empty, as we have not defined any custom tools yet. Second, we pass add_base_tools=True. The Transformers library comes with a default toolbox for agents, and some of its tools might surprise you and explain why the remote model is necessary:

- Document question answering: given a document (such as a PDF) in image format, answer a question about this document.
- Image question answering: given an image, answer a question about this image.
- Speech to text: given an audio recording of a person talking, transcribe the speech into text.
- Text to speech: convert text to speech.
- Translation: translate a given sentence from a source language to a target language.
- Python code interpreter: run LLM-generated Python code in a secure environment. By the way, this tool is added only to a ReactJsonAgent (when you pass add_base_tools=True), not to a ReactCodeAgent, since code-based agents can already execute Python code.
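Why does every tool need a name and a description? Because the LLM only learns about tools through text in its system prompt. The sketch below renders a toolbox into such a text block. ToolSpec and render_toolbox are hypothetical, the two tool entries are illustrative, and the layout is only loosely modeled on how I understand the library describes tools to the model.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ToolSpec:
    """Metadata an agent needs to advertise a tool to the LLM (illustrative)."""
    name: str
    description: str
    inputs: Dict[str, str]  # input name -> type name
    output_type: str


def render_toolbox(tools: List[ToolSpec]) -> str:
    """Format tool metadata as a plain-text block for the system prompt."""
    lines: List[str] = []
    for tool in tools:
        lines.append(f"- {tool.name}: {tool.description}")
        lines.append(f"    Takes inputs: {tool.inputs}")
        lines.append(f"    Returns an output of type: {tool.output_type}")
    return "\n".join(lines)


toolbox = [
    ToolSpec("translator", "Translates text from a source language to a target language.",
             {"text": "string", "src_lang": "string", "tgt_lang": "string"}, "string"),
    ToolSpec("final_answer", "Provides the final answer to the task.",
             {"answer": "any"}, "any"),
]
print(render_toolbox(toolbox))
```

A vague description here tends to produce vague tool choices, which is also why the description we later give the managed web agent is worth a moment's thought.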

Then there is not much more to it than to simply prompt the agent with a trial question.

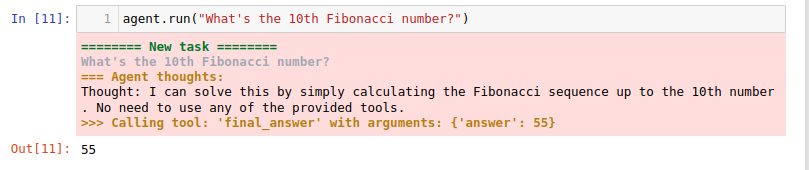

agent.run("What's the 10th Fibonacci number?")And get the following response:

Observe the Agent’s thoughts, tool calling, and the finally correct output.
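If you want to check the agent's answer yourself, here is the sort of snippet an agent might hand to the Python code interpreter. Note the indexing convention, which is an assumption here: with F(1) = F(2) = 1, the 10th Fibonacci number is 55, while counting F(0) = 0 as the first term makes it 34.

```python
def fibonacci(n: int) -> int:
    """Return the n-th Fibonacci number, using the convention F(1) = F(2) = 1."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    a, b = 1, 1  # F(1), F(2)
    for _ in range(n - 1):
        a, b = b, a + b  # slide the window one term forward
    return a


print(fibonacci(10))  # 55
```

The iterative version runs in linear time and constant memory, unlike the naive recursive definition.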

Step 3 - Scaling to more than one agent

Well, running one agent is all fun and games. How about a second agent?

Then you are in luck! Let's orchestrate two of our agents into one nice modular workflow. First, we create a ReactJsonAgent equipped with the DuckDuckGoSearchTool and our selected LLM.

```python
web_agent = ReactJsonAgent(tools=[DuckDuckGoSearchTool()], llm_engine=llm_engine)
```

Based on my trials, I found that using a ReactJsonAgent for the web search and a ReactCodeAgent as the manager on top of it gives the best results, but YMMV. Web browsing is hard because it requires iterating deeper into subpages and embedding the sourced texts. The ManagedAgent class then wraps web_agent, giving it a name and a description so that the manager can call it like a tool in the orchestration flow.
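Conceptually, ManagedAgent turns a whole agent into something the manager can call like a tool: it carries a name and a description for the manager's prompt, and calling it runs the sub-agent on the manager's request. The class below is a hypothetical, simplified stand-in, not the library's code; as far as I know the real ManagedAgent also wraps the request in its own task prompt, whose wording differs from mine. A stub replaces the real web agent so the example runs offline.

```python
from typing import Callable, List


class ManagedAgentSketch:
    """Hypothetical stand-in for ManagedAgent: makes a whole agent callable like a tool."""

    def __init__(self, agent_run: Callable[[str], str], name: str, description: str):
        self.agent_run = agent_run      # e.g. web_agent.run in the real setup
        self.name = name                # what the manager calls it
        self.description = description  # what the manager reads in its prompt

    def __call__(self, request: str) -> str:
        # Wrap the manager's request in a small task prompt for the sub-agent.
        task = (
            f"You are a helpful agent named '{self.name}'.\n"
            f"Your manager submitted this task:\n{request}\n"
            "Answer as completely as you can."
        )
        return self.agent_run(task)


received: List[str] = []


def fake_web_agent_run(task: str) -> str:
    """Stub sub-agent: records its task instead of searching the web."""
    received.append(task)
    return "stub answer"


web_search = ManagedAgentSketch(fake_web_agent_run, "web_search", "Executes the DuckDuckGo Search Tool")
print(web_search("Who is the CEO of Hugging Face?"))  # stub answer
```

The design choice is that the manager never sees the sub-agent's internals: from its point of view, web_search is just one more named function with a description.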

```python
managed_web_agent = ManagedAgent(
    agent=web_agent,
    name="web_search",
    description="Executes the DuckDuckGo Search Tool",
)
```

Then we can already put everything together and pass managed_web_agent in the managed_agents list of a ReactCodeAgent. ...ReactCodeAgent? Did you miss something? No, you didn't. This code sample uses the other type of ReAct agent, the ReactCodeAgent.

```python
manager_agent = ReactCodeAgent(
    tools=[], llm_engine=llm_engine, managed_agents=[managed_web_agent]
)
```

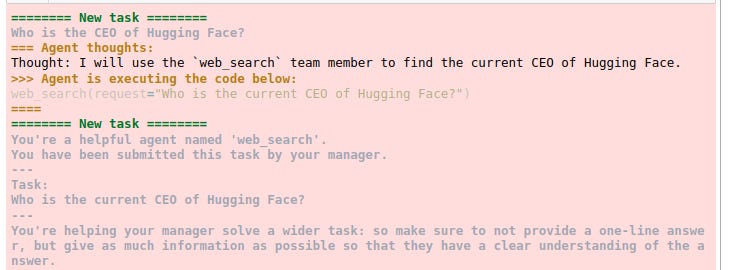

```python
manager_agent.run("Who is the CEO of Hugging Face?")
```

The ReactCodeAgent writes its tool calls as Python code, and it seems that the library, or maybe the LLM, prefers this setup. The agent then starts to execute the task. You can see in the image below that its very first decision is to use the web_search tool we created above.

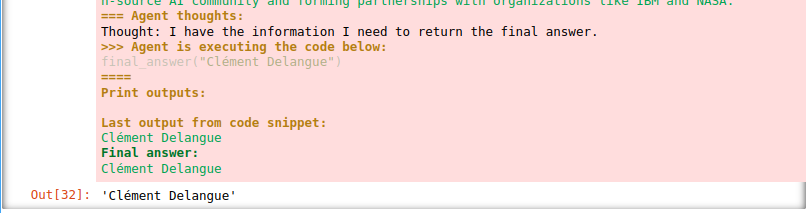

Then it runs for a while and outputs the right name.
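To make the JSON-versus-code distinction concrete: a JSON action names one tool and one input, whereas a code action is a Python snippet in which tools (including managed agents such as web_search) are ordinary functions, so the model can call several of them and combine the results in a single step. The sketch below is a toy illustration of that idea under my own assumptions; run_code_action and the web_search stub are hypothetical, and stripping builtins as done here is not a real sandbox (as far as I know, the library ships its own restricted Python interpreter for this).

```python
from typing import Callable, Dict, List


def run_code_action(code: str, tools: Dict[str, Callable[..., str]]) -> Dict[str, object]:
    """Execute a model-written snippet in which tools are plain Python functions.

    Toy only: an empty __builtins__ is NOT a security sandbox.
    """
    namespace: Dict[str, object] = {"__builtins__": {}, **tools}
    exec(code, namespace)
    return namespace  # variables the snippet defined


calls: List[str] = []


def web_search(request: str) -> str:
    """Hypothetical stub in place of the managed web agent."""
    calls.append(request)
    return f"stub result for {request!r}"


# Two tool calls plus post-processing in one action, which a single JSON action cannot express.
code_action = (
    'answers = [web_search(request=q) for q in ["CEO of Hugging Face", "Hugging Face HQ"]]\n'
    'final = " | ".join(answers)\n'
)
ns = run_code_action(code_action, {"web_search": web_search})
print(ns["final"])
```

This composability is one plausible reason the code-writing manager worked better in my runs, though I have not isolated it.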

I hope you enjoyed this short introduction to Transformers Agents. Given that I can run a really powerful model for free, I hope to be using this for Matt as well.

Please like, share, subscribe, recommend.

The code will be placed as usual in my GitHub.