Generative AI as Economic Agents: Optimizing Payouts through Bayes-Nash Equilibria

Discover the implications for economic systems and societal welfare.

“History calls those men the greatest who have ennobled themselves by working for the common good; experience acclaims as happiest the man who has made the greatest number of people happy.” Karl Marx (1835)

Matt is my agent; I gave it the ability to browse arXiv and bring me new and interesting papers about AI Agents. Working with Matt brings me joy and improves my life: by finding interesting papers for me to read, it optimizes my utility function. In other words, I become more useful to my world because I am better informed. Sometimes I also consult Matt about which paper might be most relevant to read.

By the definition used in this paper, Matt is therefore an economic agent.

Goals: Describe AI-based virtual consultants as economic agents and integrate them into some examples of decision- and game-theoretic scenarios.

Problem: How can AI Agents be given more agency? What are the economic benefits, and how can an agent optimize for economic benefits without being malevolent?

Solution: Develop a general framework for AI Consultants: agents that act on an economic incentive to support a human decision-maker in a world of unequal information.

Opinion: While the paper is convoluted and offers no quantitative results, it raises some interesting ideas that I hope to explore further in this write-up.

Paper (1/10)

Let’s dive in.

Behavioral Game Theory is an area of research where Game Theory and Behavioral Economics intersect. In traditional economics, economic agents operate within the framework of the economic system, making decisions aimed at optimizing outcomes. Commonly, economic agents wield influence over the economy through resource distribution, taxation, and legal regulations. In doing so, they have to operate in a world where not all information (modeled as dependent variables) is known. By contrast, we observed in our Game Theory study that although the game we studied is non-zero-sum, all of its dependent variables are known within the game. As a result, actively influencing the game's dependent variables to forecast its future is quite straightforward.

How can this model be extended to optimize for economic benefit in cases of imperfect information?

Optimizing for economic benefit

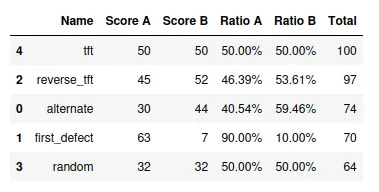

In repeated Prisoner’s Dilemma-type games, each player’s outcome depends on how both players alternate between cooperation and defection over the time horizon of the game. Similar to the Stanford study from a few decades ago, our previous study showed that the strategy yielding the best outcome for the state of the world (“Total”) is tit-for-tat, i.e., reciprocal cooperation.
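To make the comparison concrete, here is a minimal sketch of an iterated Prisoner’s Dilemma. It assumes the standard textbook payoffs T = 5, R = 3, P = 1, S = 0 (the article does not state the values used in the previous study) and a fixed horizon of 10 rounds; the strategy names and the `play` helper are illustrative, not taken from the study.

```python
from typing import Callable, List, Tuple

# Assumed textbook payoffs (not stated in the article):
# temptation T > reward R > punishment P > sucker S, and 2R > T + S.
T, R, P, S = 5, 3, 1, 0

PAYOFFS = {
    ("C", "C"): (R, R),
    ("C", "D"): (S, T),
    ("D", "C"): (T, S),
    ("D", "D"): (P, P),
}

# A strategy maps (own history, opponent history) to "C" (cooperate) or "D" (defect).
Strategy = Callable[[List[str], List[str]], str]


def tit_for_tat(mine: List[str], theirs: List[str]) -> str:
    # Cooperate in round 1, then copy the opponent's previous move.
    return "C" if not theirs else theirs[-1]


def always_defect(mine: List[str], theirs: List[str]) -> str:
    return "D"


def cooperate_on_even(mine: List[str], theirs: List[str]) -> str:
    # Alternates C, D, C, D, ...
    return "C" if len(mine) % 2 == 0 else "D"


def defect_on_even(mine: List[str], theirs: List[str]) -> str:
    # Alternates D, C, D, C, ... (out of phase with cooperate_on_even)
    return "D" if len(mine) % 2 == 0 else "C"


def play(s1: Strategy, s2: Strategy, rounds: int = 10) -> Tuple[int, int, int]:
    """Return (score of player 1, score of player 2, Total) after `rounds` rounds."""
    h1: List[str] = []
    h2: List[str] = []
    score1 = score2 = 0
    for _ in range(rounds):
        m1, m2 = s1(h1, h2), s2(h2, h1)
        p1, p2 = PAYOFFS[(m1, m2)]
        score1, score2 = score1 + p1, score2 + p2
        h1.append(m1)
        h2.append(m2)
    return score1, score2, score1 + score2


for name, pair in {
    "TFT vs TFT": (tit_for_tat, tit_for_tat),
    "TFT vs always-defect": (tit_for_tat, always_defect),
    "alternating, out of phase": (cooperate_on_even, defect_on_even),
    "always-defect vs always-defect": (always_defect, always_defect),
}.items():
    print(name, play(*pair))
```

With these assumed payoffs, sustained mutual cooperation gives the highest Total (60), out-of-phase alternation gives 50, and mutual defection only 20. Alternation falls short of mutual cooperation whenever 2R > T + S, a condition usually imposed on the repeated game.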

However, both players in this scenario have complete information: each knows the current state of the game, as described by all prior moves, payouts, and current scores. This type of game is called a “complete information game”. In this type of game, the AI has full agency to execute a move. Chess is another example; Poker is not, because each player’s cards are hidden from the others. Yet such game-playing “AIs” have existed for a long time.

Now suppose the agent is tasked with finding the optimal price for an Airbnb sublet in a given city. Not all information may be available, and the economic benefits do not accrue to the agent. For this reason, the research team at Microsoft argues that such agents have limited agency and that, in this case, an AI Agent acting as an AI consultant could be the right way forward.
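As a minimal sketch of the pricing example, assume the agent does not know which demand scenario holds but has a prior over a few hypothetical scenarios (the scenario names, probabilities, and prices below are invented for illustration). A Bayesian decision-maker then picks the price that maximizes expected revenue, and that revenue goes to the host rather than to the agent.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical demand scenarios (not from the paper): name -> (prior probability,
# highest nightly price a guest would accept). The agent does not know which one holds.
Scenarios = Dict[str, Tuple[float, float]]
SCENARIOS: Scenarios = {"quiet_week": (0.5, 80.0), "festival_week": (0.5, 150.0)}


def expected_revenue(price: float, scenarios: Scenarios) -> float:
    # The sublet is booked only if the guest's willingness to pay covers the price.
    # The revenue accrues to the human host, not to the agent.
    return sum(prob * (price if price <= wtp else 0.0) for prob, wtp in scenarios.values())


def best_price(candidates: Iterable[float], scenarios: Scenarios) -> float:
    # Bayesian decision rule: pick the price with the highest expected revenue under the prior.
    return max(candidates, key=lambda p: expected_revenue(p, scenarios))


prices = range(50, 201, 10)
print(best_price(prices, SCENARIOS))  # 80
print(best_price(prices, {"quiet_week": (0.3, 80.0), "festival_week": (0.7, 150.0)}))  # 150
```

Shifting probability toward the high-demand scenario moves the optimal price from 80 to 150, so the recommendation hinges on beliefs about information the agent cannot observe directly.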

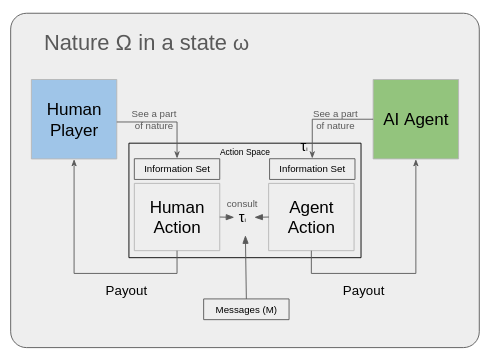

They propose the following “General Model” for the “AI Consultant” in their framework.

The General Model

The general model treats both the human player and the AI agent as part of “Nature”. Nature is part of a world model, which can be many different things, for example, a game, a stock trading app, or an Airbnb pricing model. In their model, Nature selects a specific state of the world and provides subsets of it to the human player and to the agent. The human and the agent can communicate via an array of messages; maintaining such a message history is a common feature of modern LLM-based chatbots. Both the agent and the human have access to a certain set of actions, and their action sets are assumed to be mutually exclusive.
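The ingredients of the general model map naturally onto a small data structure. The sketch below is a hypothetical encoding, not the paper’s notation: the state chosen by Nature is a dictionary of features, each party observes only its own subset of features, messages accumulate in a history, and the constructor enforces disjoint action sets. The feature and action names reuse the Airbnb example and are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Tuple


@dataclass
class GeneralModel:
    """Hypothetical encoding of the general model's ingredients (not the paper's notation)."""

    state: Dict[str, float]        # the state of the world selected by Nature
    human_view: FrozenSet[str]     # state features the human observes (their information set)
    agent_view: FrozenSet[str]     # state features the agent observes (their information set)
    human_actions: FrozenSet[str]
    agent_actions: FrozenSet[str]
    messages: List[Tuple[str, str]] = field(default_factory=list)  # (sender, text) history

    def __post_init__(self) -> None:
        # The action sets are assumed to be mutually exclusive.
        if self.human_actions & self.agent_actions:
            raise ValueError("human and agent action sets must be disjoint")

    def observe(self, who: str) -> Dict[str, float]:
        # Each party sees only its own subset of the state.
        view = self.human_view if who == "human" else self.agent_view
        return {k: v for k, v in self.state.items() if k in view}

    def send(self, sender: str, text: str) -> None:
        # Messages are appended, so the full conversation history is kept.
        self.messages.append((sender, text))


model = GeneralModel(
    state={"local_demand": 0.8, "competitor_price": 120.0, "host_min_price": 90.0},
    human_view=frozenset({"host_min_price"}),
    agent_view=frozenset({"local_demand", "competitor_price"}),
    human_actions=frozenset({"set_price"}),
    agent_actions=frozenset({"recommend_price"}),
)
print(model.observe("human"))  # {'host_min_price': 90.0}
print(model.observe("agent"))  # {'local_demand': 0.8, 'competitor_price': 120.0}
model.send("agent", "Competitors charge 120; I suggest pricing at 115.")
```

Keeping the two views as separate sets makes the unequal information explicit: the human never sees `local_demand` unless the agent puts it into a message.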

In this framework, the payout of the human player changes the state of “Nature”, whereas the agent’s payout does not.

Moreover, the human player’s information set differs from the agent’s. This makes sense if you consider consultants or co-workers in real-life settings: they, too, have experiences that differ from one’s own and can provide guidance that improves outcomes.

This setting is an example of a Bayesian game, whose standard solution concept is the Bayes-Nash Equilibrium.

The Bayes-Nash Equilibrium

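Recall that a Nash equilibrium is a situation in a game where no player can improve their payoff by unilaterally changing their strategy, given the strategies of the other players. The Bayesian Nash Equilibrium (BNE) extends this concept to games with incomplete information. Each player has a private type (their private information), and all players share a common prior over types. A strategy assigns an action to every possible type, and a strategy profile is a BNE if, for every player and every type, the prescribed action maximizes that player’s expected payoff given their beliefs about the other players’ types and the other players’ strategies.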
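To make the definition concrete, the following minimal sketch enumerates all pure-strategy profiles of a small two-player Bayesian game and keeps those that pass the BNE test described above. The game is a textbook-style Battle-of-the-Sexes variant chosen for illustration, not taken from the paper: player 1 has a single type, while player 2 privately either wants to meet player 1 (`meet`) or to avoid them (`avoid`), each with prior probability 1/2. All names (`PRIOR`, `U`, `is_bne`, `pure_bnes`) are hypothetical.

```python
from itertools import product
from typing import Dict, List, Tuple

# Illustrative game (not from the paper). Type profiles and their common prior:
PRIOR: Dict[Tuple[str, str], float] = {("t1", "meet"): 0.5, ("t1", "avoid"): 0.5}
ACTIONS = ["B", "S"]

# U[type profile][(action1, action2)] = (payoff of player 1, payoff of player 2)
U = {
    ("t1", "meet"): {("B", "B"): (2, 1), ("B", "S"): (0, 0), ("S", "B"): (0, 0), ("S", "S"): (1, 2)},
    ("t1", "avoid"): {("B", "B"): (2, 0), ("B", "S"): (0, 2), ("S", "B"): (0, 1), ("S", "S"): (1, 0)},
}

Strategy = Dict[str, str]  # maps each of a player's types to an action


def expected_payoff(player: int, my_type: str, action: str, other: Strategy) -> float:
    """Expected payoff of `player` (0 or 1) of `my_type` playing `action` against `other`.

    Weights are prior probabilities, not conditional ones; dividing by P(my_type)
    would rescale every action equally, so comparisons between actions are unchanged.
    """
    total = 0.0
    for profile, prob in PRIOR.items():
        if profile[player] != my_type:
            continue
        other_action = other[profile[1 - player]]
        actions = (action, other_action) if player == 0 else (other_action, action)
        total += prob * U[profile][actions][player]
    return total


def is_bne(s1: Strategy, s2: Strategy) -> bool:
    """True if no type of either player gains from a unilateral deviation."""
    for player, (mine, other) in enumerate([(s1, s2), (s2, s1)]):
        for my_type, action in mine.items():
            current = expected_payoff(player, my_type, action, other)
            if any(expected_payoff(player, my_type, alt, other) > current + 1e-12 for alt in ACTIONS):
                return False
    return True


def pure_bnes() -> List[Tuple[Strategy, Strategy]]:
    """Brute-force search over all pure-strategy profiles."""
    types = [sorted({profile[i] for profile in PRIOR}) for i in (0, 1)]
    strategies = [
        [dict(zip(ts, acts)) for acts in product(ACTIONS, repeat=len(ts))] for ts in types
    ]
    return [(s1, s2) for s1 in strategies[0] for s2 in strategies[1] if is_bne(s1, s2)]


print(pure_bnes())  # [({'t1': 'B'}, {'avoid': 'S', 'meet': 'B'})]
```

The search finds a single pure-strategy BNE: player 1 plays B, and player 2 plays B when of type `meet` and S when of type `avoid`. Brute-force enumeration only scales to tiny games, but it mirrors the definition line by line.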