How To Build a Car That Drives Itself

The System Architecture of Autonomous Vehicles (Core Modules)

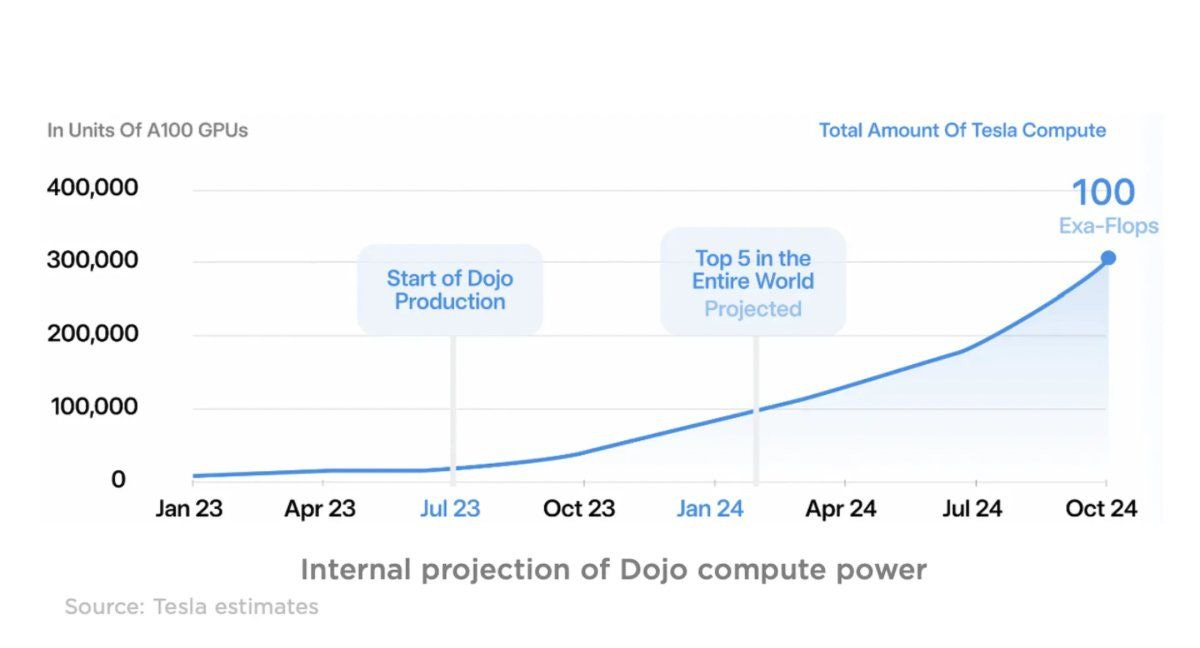

Tesla’s new $300 million AI cluster is about to go live today. Its 10,000 Nvidia H100 GPUs will take Tesla’s capacity to train full self-driving technology to a whole new level.

Source: https://cdn.mos.cms.futurecdn.net/iBCinTtG3e6G9MHcfwJcXU-1200-80.jpeg

The advancement of autonomous driving technology has breathed new life into one of the oldest industries in the world. Clearly, I am talking about the transportation of goods here.

Waymo (Google), Tesla, and Cruise (GM) promise us a future where vehicles can navigate and operate without human intervention. In this post, I will dive into the intricate architecture of the Autonomous Driving System (ADS) as defined by my good friends from the Autonomous Vehicle Computing Consortium (AVCC).


In this post we will be exploring the components needed to build a car that drives itself.

Core Components of ADS

The ADS architecture comprises 11 core modules. Each module is a set of interconnected components performing specific functions, and together they enable the vehicle to navigate safely and efficiently. There are important adjacent components, like security, but for this post, I will be focusing entirely on the car’s core operations.

Similar to a human being driving a car, an autonomous agent needs to have access to a variety of “senses” to experience the world around it.

Perception: The “eyes” of the car. This module relies heavily on an array of sensors such as LiDAR, radar, cameras, and ultrasonic devices, in combination with computer vision technology. These eyes are usually hardware products procured from vendors such as Velodyne Lidar, Luminar, and others:

Velodyne Lidar: Puck, Ultra Puck, Alpha Prime

Luminar Technologies: Iris

Innoviz Technologies: InnovizOne

Quanergy Systems: M8

Hesai Technology: AT128

If you are planning on building an autonomous car, make sure your vehicle is equipped with a range of sensors (regular cameras, thermal cameras, LiDAR, radar) that provide the algorithms with different perspectives (short-range to wide-range) of the environment.
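To make that checklist concrete, here is a minimal sketch of how a sensor suite could be described and sanity-checked in Python. Everything in it is an assumption for illustration: the `Sensor` class, the `REQUIRED_KINDS` set, the sensor names, and the range numbers are placeholders, not vendor specifications or anything defined by the AVCC.

```python
from dataclasses import dataclass
from typing import Iterable, Set, Tuple


@dataclass(frozen=True)
class Sensor:
    name: str           # hypothetical label for the mounted device
    kind: str           # e.g. "camera", "thermal", "lidar", "radar", "ultrasonic"
    max_range_m: float  # placeholder value, not a vendor specification


# Assumption: the sensor kinds we insist on for this sketch.
REQUIRED_KINDS = {"camera", "lidar", "radar"}


def missing_kinds(suite: Iterable[Sensor]) -> Set[str]:
    """Return the required sensor kinds that the suite does not contain."""
    return REQUIRED_KINDS - {sensor.kind for sensor in suite}


def range_span(suite: Iterable[Sensor]) -> Tuple[float, float]:
    """Return (shortest, longest) maximum range across the suite, in meters."""
    ranges = [sensor.max_range_m for sensor in suite]
    return min(ranges), max(ranges)


# Illustrative suite with made-up numbers.
suite = [
    Sensor("front_camera", "camera", 150.0),
    Sensor("roof_lidar", "lidar", 200.0),
    Sensor("bumper_ultrasonic", "ultrasonic", 5.0),
]

print(missing_kinds(suite))  # the kinds still to be added to the vehicle
print(range_span(suite))     # how far apart short-range and long-range coverage are
```

A check like this is only bookkeeping, but it is a cheap way to catch a suite that has no long-range sensor or is missing a whole sensing modality before any perception code runs.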

In this phase, the vehicle’s sensors collect real-time data about its surroundings.

The collected data is then processed using advanced algorithms for two tasks. Object Detection, i.e., detecting and tracking objects in the scene, such as road signs and other infrastructure. Object Identification, i.e., identifying what each object is: road signs and their meaning, pedestrians, animals, trees, etc.
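The tracking half of that step can be sketched in a few lines. This is a simplified illustration, not how any production system does it: I assume a detector has already produced bounding boxes with labels, and I use greedy intersection-over-union (IoU) matching to carry an object's ID from one frame to the next. The `Detection` class, the `assign_tracks` function, and the 0.3 threshold are all hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Detection:
    box: Box
    label: str                      # result of identification, e.g. "stop_sign"
    track_id: Optional[int] = None  # filled in by assign_tracks


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def assign_tracks(previous: List[Detection], current: List[Detection],
                  next_id: int, threshold: float = 0.3) -> int:
    """Greedy tracking: reuse the ID of the best-overlapping previous detection
    with the same label, otherwise start a new track. Assumes every detection
    in `previous` already has a track_id. Returns the next unused ID."""
    used = set()
    for det in current:
        best, best_score = None, threshold
        for prev in previous:
            if prev.track_id in used or prev.label != det.label:
                continue
            score = iou(prev.box, det.box)
            if score >= best_score:
                best, best_score = prev, score
        if best is not None:
            det.track_id = best.track_id
            used.add(best.track_id)
        else:
            det.track_id = next_id
            next_id += 1
    return next_id


# Two consecutive frames with made-up boxes.
frame1 = [Detection((100, 100, 200, 200), "stop_sign")]
next_id = assign_tracks([], frame1, next_id=0)

frame2 = [
    Detection((110, 105, 210, 205), "stop_sign"),   # same sign, slightly shifted
    Detection((400, 300, 450, 420), "pedestrian"),  # new object
]
next_id = assign_tracks(frame1, frame2, next_id)

for det in frame2:
    print(det.label, det.track_id)
```

In this example the stop sign keeps its ID across both frames, while the pedestrian gets a fresh one. Real trackers add motion models and handle occlusion, but the core idea of associating detections across frames is the same.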