Memory and Knowledge Management for Intelligent Agents: Enhancing AI Performance

Helping a stateless agent navigate your apartment in search of your keys.

Imagine you want to go to work, and you don’t remember where your car keys are. Then you realize that you have to search your whole apartment, because you also don’t remember what your apartment looks like.

How do you find your keys?

As in many cases, what matters is not the problem per se, but how you define it. In this case, what if we model the key search as a decision-making process in an uncertain environment where the agent cannot directly observe the full state of the system?

That is, for every room you find yourself in, you will have to check whether the keys are there.

Entrance (E)

Living Room (LR)

Kitchen (K)

Hallway (H)

Bedroom 1 (B1)

Bedroom 2 (B2)

Bathroom (BA)

One way to do this is to define the problem space as a partially observable Markov decision process (POMDP) environment that you or your cognitive agent can engage with.

A POMDP can roughly be defined like this:

States (S): The set of all possible states of the environment. For example: which room are you in, what time is it, what else is in the room, and which doors can be opened?

Actions (A): The set of actions the agent can take, e.g., open doors, turn on lights, etc.

Observations (O): The set of possible observations the agent can receive from the environment, e.g., “I hear the faint hum of a refrigerator.” or “The clock on the wall shows 2:30 PM.”

And a selection of functions that guide the exploration through the environment:

Transition function (T): T( s' | s, a ) represents the probability of transitioning to state s' given the current state s and action a.

Observation function (O): O(o|s', a) represents the probability of observing o given that the agent took action a and ended up in state s'.

Reward function (R): R(s, a) represents the immediate reward received for taking action a in state s.

Discount factor (γ): A value between 0 and 1 that determines the importance of future rewards.

And finally, some ground rules of the environment:

Initial belief state (b0): The initial probability distribution over possible states. You wake up in your bed and want to search for the keys. At this point, it can be argued that the keys are equally likely to be in any room of your apartment.

Horizon (H): The number of time-steps the process continues, which can be finite or infinite. How many times can you search the whole place before you have missed going to work?

Policy (π): A function that maps belief states to actions, determining the agent's behavior. The policy adjusts based on your “lived experiences” in finding the keys.
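To make the pieces above concrete, here is a minimal sketch of how the key search could be written down as a POMDP container in Python. Several things are assumptions made only for illustration: the state is reduced to "the room the keys are in", the only actions are hypothetical `search_<room>` actions, the keys never move, a search never misses the keys, and the reward values, discount factor, and horizon are arbitrary.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

ROOMS = ["E", "LR", "K", "H", "B1", "B2", "BA"]


@dataclass
class POMDP:
    states: List[str]                                  # S
    actions: List[str]                                 # A
    observations: List[str]                            # O
    transition: Callable[[str, str, str], float]       # T(s' | s, a)
    observation_fn: Callable[[str, str, str], float]   # O(o | s', a)
    reward: Callable[[str, str], float]                # R(s, a)
    gamma: float                                       # discount factor
    b0: Dict[str, float]                               # initial belief state
    horizon: int                                       # number of time-steps


def transition(s_next: str, s: str, a: str) -> float:
    # Assumption: the keys never move, whatever the agent does.
    return 1.0 if s_next == s else 0.0


def observation_fn(o: str, s_next: str, a: str) -> float:
    # Assumption: searching the right room always reveals the keys.
    found = a == f"search_{s_next}"
    return 1.0 if (o == "keys") == found else 0.0


def reward(s: str, a: str) -> float:
    # Assumption: arbitrary values; finding the keys pays off, every miss costs a little.
    return 10.0 if a == f"search_{s}" else -1.0


key_search = POMDP(
    states=ROOMS,
    actions=[f"search_{r}" for r in ROOMS],
    observations=["keys", "no_keys"],
    transition=transition,
    observation_fn=observation_fn,
    reward=reward,
    gamma=0.95,                                   # illustrative value
    b0={r: 1 / len(ROOMS) for r in ROOMS},        # keys equally likely in every room
    horizon=20,                                   # illustrative value
)
```

A richer model would also track where the agent is, the time, and which doors can be opened, as the description of S suggests; the sketch leaves those out to stay short.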

Memory

Memory comes in many different sizes and architectures. Here they are, presented in reverse temporal order:

External memory: a digital library you go to in order to read up on the Napoleonic Wars.

Long-term memory, which stores specific experiences or events (episodic) or general knowledge and facts about the world (semantic).

Short-term memory, which includes the current state of the world. Sometimes referred to as “working memory”, “session”, or “chat history”.

Sensory memory, which briefly stores vast amounts of sensory data before it is selectively filtered into conscious awareness as working memory.

For our cognitive agent, the memory that matters most for operations is short-term memory, whose contents are traditionally either semantic or episodic in nature. However, most agents still struggle to retrieve memories accurately, and it is still unclear how memory processes should be regulated and optimized.

Tool use (SerpApi, for example) and RAG over external data stores have convinced me that cognitive agents manage long-term memory more reliably than they work with short-term memory. Long-term memory is therefore what potentially equips them to handle complex tasks that require recalling past information, adapting to new situations, and making informed decisions based on incomplete data.

Why is that?

Managing Short-Term Memory

A new memory is created once our sensors observe something new: a new sight, a new smell, or a new texture. Because this sensory memory is fleeting, we need to hand it over to short-term memory, which provides the right context to the attention model.

In other words, once we have created a new short-term memory from our sensors, what are we going to do with it?

Will we move the short-term memory

to episodic memory, or

to semantic memory?

Episodic vs Semantic

Both episodic and semantic memories are part of the long-term memory of our agent. Episodic memory pertains to the recollection of specific events and experiences that are tied to particular times and places. It involves personal memories that include contextual details about when and where they occurred.

Semantic memory, on the other hand, deals with general world knowledge and facts that are not linked to specific occurrences. For example, knowing that Paris is the capital of France is a semantic memory, whereas recalling a visit to the Eiffel Tower involves episodic memory.

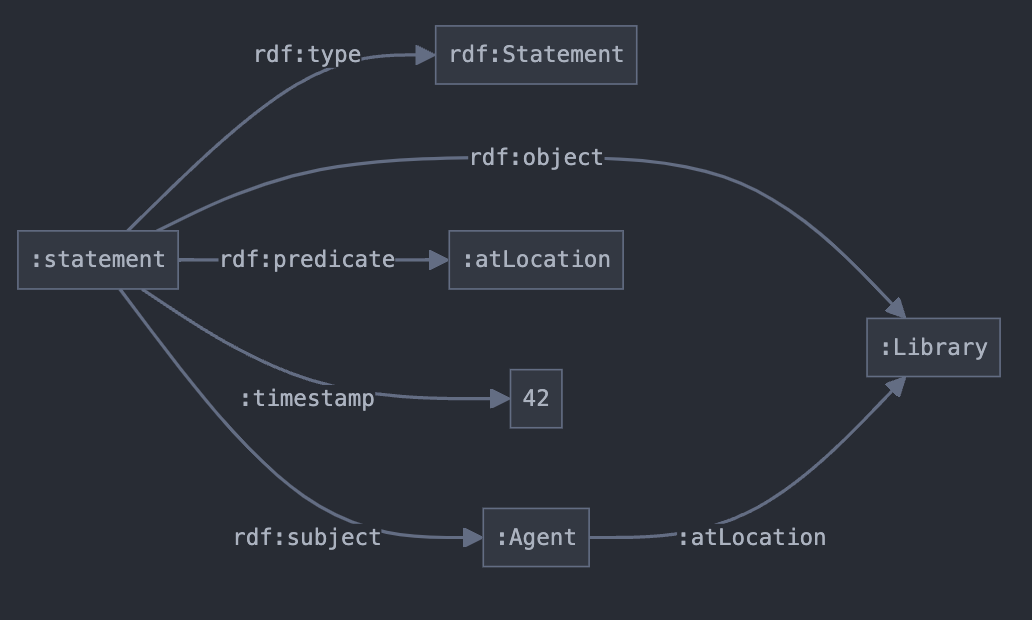

Once we have decided whether a specific short-term memory from the chat history is worth remembering, how do we go about encoding, storing, and retrieving it? To model memory computationally for our agents, we can take the semantic web concept of RDF (Resource Description Framework) triples and add relation qualifier key-value pairs, turning them into RDF quadruples.

For example, given a memory triple (Agent, atLocation, Library), we would turn it into (Agent, atLocation, Library, {timestamp: 42}) to make it an episodic memory, and into (Agent, atLocation, Library, {strength: 2}) to make it a semantic memory.

This knowledge graph becomes even more powerful if it can be searched through knowledge-graph embeddings that guide us in storing and retrieving memories. And if we can codify memory events into knowledge graph structures, would that also work for defining the right memory management policy?
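The quadruple idea can be sketched in a few lines of Python. This is only an illustration using plain tuples and dicts; the helper names `to_episodic`, `to_semantic`, and `is_episodic` are hypothetical and not taken from any RDF library.

```python
from typing import Dict, Tuple

Triple = Tuple[str, str, str]
Quad = Tuple[str, str, str, Dict[str, int]]


def to_episodic(triple: Triple, timestamp: int) -> Quad:
    """Attach a timestamp qualifier: the memory is tied to a specific moment."""
    head, relation, tail = triple
    return (head, relation, tail, {"timestamp": timestamp})


def to_semantic(triple: Triple, strength: int = 1) -> Quad:
    """Attach a strength qualifier: the memory is a general, time-free fact."""
    head, relation, tail = triple
    return (head, relation, tail, {"strength": strength})


def is_episodic(quad: Quad) -> bool:
    """Assumption: the qualifier key alone tells the two memory types apart."""
    return "timestamp" in quad[3]


triple = ("Agent", "atLocation", "Library")
episodic = to_episodic(triple, timestamp=42)
semantic = to_semantic(triple, strength=2)

print(episodic)  # ('Agent', 'atLocation', 'Library', {'timestamp': 42})
print(semantic)  # ('Agent', 'atLocation', 'Library', {'strength': 2})
```

Keeping the qualifier as a small dict means the same triple can live in both memory systems at once, distinguished only by its fourth element.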

I believe that the best agent is one that recasts its given learning objective as learning a memory management policy. That lets it capture the most likely hidden state in a form that is not only interpretable but also reusable.

Memory and KGs

We have learned that we can define an environment for search in the form of a knowledge graph.

We have also learned that it could be possible to define the environment entirely as a knowledge graph (KG), where the hidden states are dynamic KGs. In a standard POMDP, states are often represented as vectors or discrete values. What if each hidden state is itself a complete knowledge graph instead? This would be a substantially more complex representation, but also a significantly more flexible one, able to capture rich, structured information about the environment. An additional benefit is that the KG is both human- and machine-readable, which makes it easy to see what the agent experiences.

It also makes visible which experiences our agent remembers and forgets while navigating the environment, so the approach can work as a form of memory.

Taking advantage of belief states can help solve POMDP problems.
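For reference, the standard belief update for a POMDP can be written with the transition function T and observation function O defined earlier. After taking action a and receiving observation o, the belief b over states becomes a new belief b':

```latex
b'(s') = \frac{O(o \mid s', a) \sum_{s \in S} T(s' \mid s, a)\, b(s)}
              {\sum_{s'' \in S} O(o \mid s'', a) \sum_{s \in S} T(s'' \mid s, a)\, b(s)}
```

The denominator only renormalizes the result so that the new belief sums to 1. If we assume the keys never move, T(s' | s, a) is 1 when s' = s and 0 otherwise, and the update reduces to reweighting each room by how well it explains the observation.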

The environment starts in an initial state distribution capturing the likelihood that the key can be found in each room.

In the beginning we don’t know anything, which is why the likelihood of finding the key is the same for every room (1/7 ≈ 0.143).

Initial Belief State:

{E: 0.143, LR: 0.143, K: 0.143, H: 0.143, B1: 0.143, B2: 0.143, BA: 0.143}

Then the agent can move through the states and adjust the probabilities accordingly. Suppose the agent has just checked the entrance without finding the keys, and now takes its next action: Move to Living Room

New Observation: (Entrance, {Time: 06:32 AM}, No Keys) to be stored in episodic memory.

Updated Belief State:

{E: 0.0, LR: 0.167, K: 0.167, H: 0.167, B1: 0.167, B2: 0.167, BA: 0.167}
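The step from the initial belief state to the updated one can be sketched as follows. The sketch assumes that a search never misses the keys, so a room that has been searched without success drops to probability 0 and the remaining probability mass is renormalized. The function names `uniform_belief` and `rule_out` are hypothetical.

```python
from typing import Dict, List

ROOMS = ["E", "LR", "K", "H", "B1", "B2", "BA"]


def uniform_belief(rooms: List[str]) -> Dict[str, float]:
    """Initial belief: the keys are equally likely to be in every room."""
    return {room: 1 / len(rooms) for room in rooms}


def rule_out(belief: Dict[str, float], searched_room: str) -> Dict[str, float]:
    """Update the belief after seeing 'No Keys' in searched_room.

    Assumption: a search is perfect, so the searched room gets probability 0
    and the other rooms are rescaled to sum to 1 again.
    """
    posterior = dict(belief)
    posterior[searched_room] = 0.0
    total = sum(posterior.values())
    if total == 0:
        raise ValueError("every room has been ruled out")
    return {room: p / total for room, p in posterior.items()}


b0 = uniform_belief(ROOMS)
b1 = rule_out(b0, "E")

print({room: round(p, 3) for room, p in b0.items()})
# {'E': 0.143, 'LR': 0.143, 'K': 0.143, 'H': 0.143, 'B1': 0.143, 'B2': 0.143, 'BA': 0.143}
print({room: round(p, 3) for room, p in b1.items()})
# {'E': 0.0, 'LR': 0.167, 'K': 0.167, 'H': 0.167, 'B1': 0.167, 'B2': 0.167, 'BA': 0.167}
```

With an imperfect search, the searched room would keep a small probability instead of dropping to 0; the full Bayesian update handles that case.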

At every time step, the agent maintains a belief state, which is a probability distribution over the state space. The agent then chooses the next best action a_t ∈ A based on its current belief state according to its policy. Intuitively, the agent picks as its next room one that is both nearby and has a high probability of containing the keys. In other words, the agent is unlikely to return to the entrance from the living room: the entrance is nearby, but the probability that the keys are there is 0 because we have already searched it.
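One naive way to express "high probability and nearby" as a policy is to score each room by its belief divided by one plus its distance from the agent. Both the scoring rule and the floor plan below are assumptions made for illustration; the article does not specify how the rooms are connected.

```python
from collections import deque
from typing import Dict, List

# Hypothetical floor plan: which rooms are directly connected.
FLOOR_PLAN: Dict[str, List[str]] = {
    "E": ["LR"],
    "LR": ["E", "K", "H"],
    "K": ["LR"],
    "H": ["LR", "B1", "B2", "BA"],
    "B1": ["H"],
    "B2": ["H"],
    "BA": ["H"],
}


def distances_from(start: str, plan: Dict[str, List[str]]) -> Dict[str, int]:
    """Breadth-first search: number of doorways between start and every room."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        room = queue.popleft()
        for neighbour in plan[room]:
            if neighbour not in dist:
                dist[neighbour] = dist[room] + 1
                queue.append(neighbour)
    return dist


def next_room(belief: Dict[str, float], current: str,
              plan: Dict[str, List[str]] = FLOOR_PLAN) -> str:
    """Greedy policy: prefer rooms that are likely to hold the keys and close by."""
    dist = distances_from(current, plan)
    return max(belief, key=lambda room: belief[room] / (1 + dist[room]))


# The agent stands in the living room; entrance and living room are ruled out.
belief = {"E": 0.0, "LR": 0.0, "K": 0.2, "H": 0.2, "B1": 0.2, "B2": 0.2, "BA": 0.2}
choice = next_room(belief, current="LR")
print(choice)  # a room adjacent to the living room, never the entrance
```

The entrance is just as close as the kitchen or the hallway, but its belief of 0 gives it a score of 0, so the greedy policy never goes back there.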