Building a Multi-Agent DeepResearch for Investing with OpenAI SDK

When the Framework Works but the Agent Doesn't: OpenAI's Financial Research Struggles

Earlier this month, OpenAI launched a new SDK for building agents. This is in line with my assumption that all large model providers will eventually offer their own efficient, embedded agent frameworks.

The initial verdict is as one would expect: it’s nice. OpenAI’s framework is a solid piece of engineering, which leads me to wonder: why would I use LangChain/LangGraph, LlamaIndex, or CrewAI when I can just use this?

And it works out of the box. My main concern would probably be vendor lock-in. Beyond that, if I could swap the OpenAI model for a generic call to another LLM, it would be pretty much perfect. But I digress.

Let’s focus first on what makes this framework so good.

OpenAI defines agents as systems that work independently to perform tasks (to act) on the user’s behalf.

The Agents SDK is the next product iteration of the Swarm research project, slowly pushing agent tech toward production readiness.

What makes the SDK better than Swarm is that agents can now be orchestrated through handoffs, i.e., tools for transferring control between agents. Agent workflows now also support tracing, allowing you to view, debug, and optimize your agent ops.

But how does it work in detail? And more importantly.

Is it reliable?

Let’s dive right in.

Setup

If you already have it installed, you can skip this step, but you never know.

So here you go.

You need to clone the GitHub repo, then you go into the folder and create a virtual environment.

git clone https://github.com/openai/openai-agents-python.git

cd openai-agents-python/

python3.11 -m venv venv

source venv/bin/activate

OpenAI uses venv, Python’s built-in tool for creating virtual environments, which I also use and like. I used Python 3.11. The “source” statement then activates the virtual Python environment.

pip install openai-agents

OpenAI recommends installing the agents package as shown above. Since I want to use a pre-built example for this exercise, it makes sense to have the code available locally, and for a reason: before we can run the example, we need to adjust the “main.py” script that you can find in the subfolder “examples/financial_research_agent”. Here we need to provide your OpenAI API key.

import os

os.environ["OPENAI_API_KEY"] = "<YOUR KEY>"

Once this is done, you could theoretically run it with this statement.

python3.11 -m examples.financial_research_agent.main

But why would you do that? You don’t know what the agent does yet!
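A side note on the API key step: if you would rather not write the key into a script that lives in a cloned repo, one option is to export it in your shell and only check for it in Python. The snippet below is a minimal sketch of that idea; the helper name `load_api_key` is hypothetical and not part of the SDK or the example.

```python
import os


def load_api_key(env=os.environ, name="OPENAI_API_KEY"):
    """Return the API key from the environment, or fail with a clear message.

    Hypothetical helper for illustration; it is not part of the Agents SDK.
    """
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(
            f"{name} is not set; export it in your shell before running the example."
        )
    return key
```

With the key exported in the shell (`export OPENAI_API_KEY=...`), a call to `load_api_key()` at the top of the script fails early and loudly when the key is missing, and nothing secret ends up in the file.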

Financial Research Agent (FRA)

In a nutshell, the FRA orchestrates a lightweight process for financial research. From planning searches to generating and verifying a final financial report, it relies on a series of AI agents specialized in different tasks, such as financial analysis, risk assessment, web searching, and report writing.

DeepSearch has become increasingly popular recently, and my own older work on the topic still holds up. So we need to understand what this agent, or rather this multi-agent system, does as it creates a research analysis for a given query.

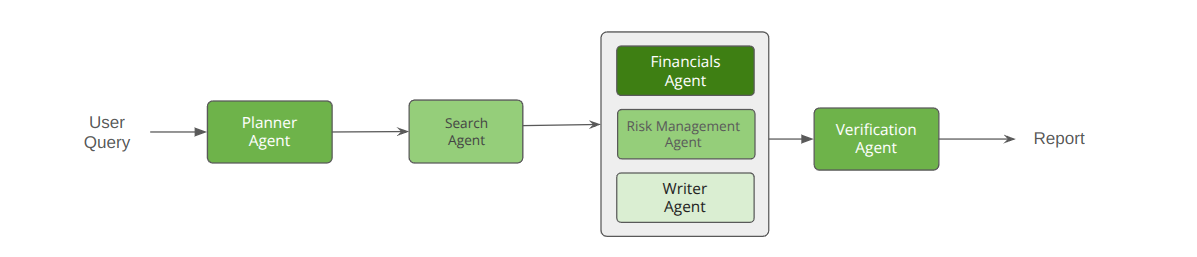

As you can see, the overall architecture of the agent is quite basic.

The user provides a search query to the agent, and the Planner Agent creates a structured search plan to answer it. The Search Agent then executes the searches and hands the results over to a group of specialized sub-agents. Finally, a verification judge checks whether the resulting report is good or not.
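To make the flow tangible, here is a framework-free sketch of the same plan, search, write, verify sequence. It is not the SDK code: every function is a stand-in stub, and all names (`SearchItem`, `plan_searches`, `run_search`, `write_report`, `verify_report`, `run_pipeline`) are my own placeholders.

```python
from dataclasses import dataclass


@dataclass
class SearchItem:
    query: str   # what to search for
    reason: str  # why this search helps answer the user's question


def plan_searches(user_query):
    # Stand-in for the Planner Agent: turn the query into a structured plan.
    return [
        SearchItem(f"{user_query} latest earnings", "recent financial performance"),
        SearchItem(f"{user_query} risk factors", "known risks and red flags"),
    ]


def run_search(item):
    # Stand-in for the Search Agent: a real agent would call a web search tool.
    return f"[summary for '{item.query}']"


def write_report(user_query, summaries):
    # Stand-in for the Writer Agent: combine the search summaries into a report.
    body = "\n".join(f"- {s}" for s in summaries)
    return f"Report: {user_query}\n{body}"


def verify_report(report):
    # Stand-in for the verification judge: a trivial structural check.
    return report.startswith("Report:") and "- " in report


def run_pipeline(user_query):
    plan = plan_searches(user_query)
    summaries = [run_search(item) for item in plan]
    report = write_report(user_query, summaries)
    return report, verify_report(report)
```

Calling `run_pipeline("ACME")` returns the stub report together with the verifier's verdict. In the real example, each of these functions is an LLM-backed agent rather than a string template.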

For all of the agents, system prompts are extremely simple.

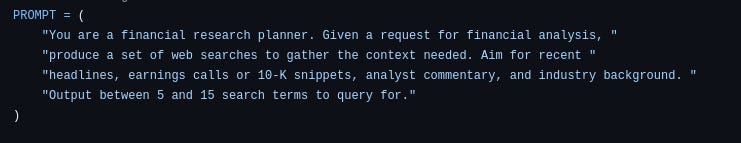

Here is an example for the planner agent:

A simple prompt doesn’t have to be ineffective. I doubt, however, that the partial prompt “headlines, earnings calls, or 10-K snippets[…]” will have much impact down the line. And this opinion is based on several years of hands-on agent ops experience building such systems in production.

The agent is not only the prompt; it needs to be instantiated.

This is done like this:

from agents import Agent

planner_agent = Agent(
    name="FinancialPlannerAgent",
    instructions=PROMPT,
    model="o3-mini",
    output_type=FinancialSearchPlan,
)

Here, the name is provided for tracing and orchestration. The system prompt is passed in as the instructions and defines the role and personality the agent should have.

And “FinancialSearchPlan” is a simple Pydantic BaseModel that constrains the planner’s output to a structured, validated format, which makes the response more reliable.

Once the search plan is written, the Search Agent begins to crawl, executing the searches in sequential order and gathering information. Note that this agent uses OpenAI’s recently launched WebSearchTool and not Tavily, DDG, or SerpAPI.
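Coming back to the structured search plan for a moment: the point of a typed output is that malformed model responses get rejected before they reach the next agent. The example relies on Pydantic for this. The sketch below shows the same idea with stdlib dataclasses so it runs without dependencies. The class names, the field names `reason` and `query`, and the `parse_plan` helper are assumptions for illustration, not the example's actual definitions.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class SearchItem:
    reason: str  # assumed field: why the search is relevant
    query: str   # assumed field: the search term to run


@dataclass(frozen=True)
class SearchPlan:
    searches: tuple  # tuple of SearchItem


def parse_plan(raw_json):
    """Validate raw model output against the expected plan shape."""
    data = json.loads(raw_json)  # raises ValueError on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("plan must be a JSON object")
    items = data.get("searches")
    if not isinstance(items, list) or not items:
        raise ValueError("plan must contain a non-empty 'searches' list")
    parsed = []
    for entry in items:
        if not isinstance(entry, dict):
            raise ValueError("each search must be a JSON object")
        reason, query = entry.get("reason"), entry.get("query")
        if not isinstance(reason, str) or not isinstance(query, str):
            raise ValueError("each search needs string 'reason' and 'query' fields")
        parsed.append(SearchItem(reason=reason, query=query))
    return SearchPlan(searches=tuple(parsed))
```

A well-formed response parses into a `SearchPlan`, while a response with a missing or mistyped field raises a `ValueError` instead of silently flowing into the search step. Pydantic gives you this behavior declaratively, which is presumably why the example uses it.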

Then the raw search results are handed over to the Writer Agent. I suppose that OpenAI’s web search tool already returns its results in a predefined format that makes it easy for the Writer Agent to compose the report.

The workflow orchestration is really interesting here, though.

Both the risk agent and the financial agent are instantiated as tools.

Instantiating agents as tools is different from handoffs in two ways:

In handoffs, the new agent receives the conversation history. As a tool, the new agent receives generated input.

In handoffs, the new agent takes over the conversation. As a tool, the conversation is continued by the original agent.

In code that looks like this: