Paper Review | Microsoft's Interactive Agent Foundation Model: Advancing AI Across Robotics, Gaming, and Healthcare

Even OpenAI acknowledges that agent tech will be a hot topic in 2024

Most AI implementations still focus on RAG, which makes intuitive sense since RAG is a data problem that forms the foundation for agents. In my opinion, though, agents are the more interesting technology. Now Microsoft Research follows up by introducing an Interactive Agent Foundation Model.

Goal: Define a framework for agents to generalize well across domains and modalities.

Problem: Current agent frameworks (CrewAI, BabyAGI, AutoGPT, or BCG Agentkit) are built on the notion of tasks. They don’t support multi-modality, some don’t have access to external tools, and they follow only a limited memory strategy.

Solution: The project team proposes a multi-domain, multi-model approach based on a unified framework of pre-trained models for handling text, visual data, and actions.

The agent has a better world model and can take action based on reasoning, telemetry, and appropriate feedback loops.

The datasets used for the pre-training come from

Robotics: human-machine manipulation based on physical world interactions (not unlike the Franka Emika dataset we have seen before);

Gaming: human-machine embodiment in virtual reality — similar to the GTA6 dataset;

Healthcare: augmented human-machine interaction in traditional multimodal tasks. Has some lateral knowledge transfer I guess.

Opinion

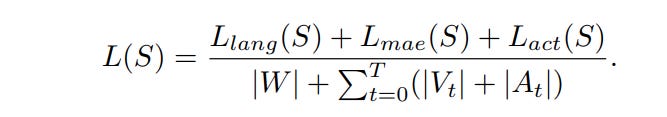

The team defines separate loss functions for each input stream. Intuitively, that doesn’t make much sense to me, but it seems to work.
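One way to picture "separate loss functions for each input stream" is that each stream gets its own loss and the training objective adds them up. The sketch below is only an illustration under my own assumptions, not the paper's implementation: I assume cross-entropy for language and action tokens, squared error for visual reconstruction, and equal weights. All function names are hypothetical.

```python
import math

def cross_entropy(probs, target_index):
    """Negative log-likelihood of the correct token (assumed for language/action)."""
    return -math.log(probs[target_index])

def mean_squared_error(pred, target):
    """Average squared difference (assumed for visual reconstruction)."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def total_loss(lang_loss, action_loss, visual_loss, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the per-stream losses; equal weights are an assumption."""
    w_lang, w_act, w_vis = weights
    return w_lang * lang_loss + w_act * action_loss + w_vis * visual_loss

# Toy values for one training example (made up for illustration).
lang = cross_entropy([0.7, 0.2, 0.1], target_index=0)
act = cross_entropy([0.25, 0.25, 0.5], target_index=2)
vis = mean_squared_error([0.1, 0.4], [0.0, 0.5])
loss = total_loss(lang, act, vis)
```

Each stream still gets its own gradient signal, but a single scalar is what the optimizer sees, which may be why the setup works better than intuition suggests.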

Having the agent focus on embodiments helps with generalizations for robotic operations. That would be really cool if it does work. I don’t think that the dataset in itself works well, and being detached from the physical embodiment feels like building a framework for a body that doesn’t exist.

Links: Paper, GitHub (coming soon…I guess)

Kind note to my paying readers (thank you for your support!). I put links to the datasets behind a paywall at the bottom. Hope that adds value to you.

Let me know if not.

Introduction

The research team at Microsoft is proposing a framework for AI Agents that is largely focused on gaming, healthcare, and robotics. So far so good. These are huge markets that can benefit from this kind of automation. The problem that many such approaches have faced is that LLMs can be non-deterministic and start to hallucinate, which in the realm of trajectories can have dire physical consequences.

Microsoft's research team aims to solve this problem by grounding their agent-based AI in a deeper understanding of its environmental context. The basic assumption here is that hallucinations are the result of incomplete world models.

Definitions

Embodied agent: An agent capable of interacting with the environment and with other agents in that environment. Embodied agents are expected to perform tasks in virtual reality (games) and the physical world (robotics, healthcare).

Perception: Enables the agent to learn from diverse data sources, such as images, videos, text, and speech, and to fuse them into a coherent representation of the world.

Planning: The process of generating and selecting actions to achieve a desired goal or outcome. Planning is important for long-range tasks, such as navigating in a robotics environment.

Interaction: Means of exchanging information and influencing behavior between agents and their environment. In many cases, tasks require multiple rounds of interactions between AI and humans or the environment.

Note to self: For the investment agent that you are working on, the environment is rapidly changing based on market sentiment and available information. Some information coming from the environment is more important than other information. The concept of signal-to-noise ratio is important.

Collaborative system: A system that consists of multiple agents that work together to achieve a common goal or objective.

Big Picture Idea

What Microsoft wants to unlock through the proposed framework is that agents become capable of autonomously taking suitable and seamless action based on sensory input, whether in the physical world or a virtual or mixed-reality environment representing the physical world.

But it’s not as straightforward as this may seem.

Challenges

The real world and most virtual worlds are largely unstructured environments, where visual impurities affect both high-level and low-level actions of the embodied agent given the same goal instruction;

These worlds also allow for open sets of objects that require the agent’s decision-making module to use common sense knowledge rather than hardcoding it manually.

We humans are masters of natural language interactions. Therefore, our interactions with the environment where the agent operates also require the agent to understand and operate on more than just template-based commands. For example, in real-world business settings, we set goals, constraints, and plans and express them in everyday language.

If our agents reached the same level of understanding, it would unlock a more comprehensive approach to these complex challenges. The project team approaches these challenges by using a variety of specialized datasets.

Datasets

These datasets were sourced from the robotics, gaming, and healthcare domains and come on top of traditional LLM training data, i.e., Internet-sourced textual data.

For the robotics dataset, the research team tested the model on language-guided manipulation tasks. The team used the Language-Table (Lynch et al., 2023) and also the CALVIN (Mees et al., 2022) datasets. Both datasets combine real-world instructions with robot trajectories. For example, in the Lynch dataset, a robot gripper was tasked to rearrange tabletop objects following language commands.

For all robotics data, the team also included special action tokens to indicate the end of a trajectory. For example, in Google’s Language-Table, the team included 21 binned actions for each of the x and y directions, representing the end-effector translation target. The team also included 21 binned state tokens representing the current end-effector translation for each of the x and y directions, and an equal number of state tokens representing the previous robot action.
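To make "21 binned actions" concrete, here is a minimal sketch of how a continuous x/y translation could be turned into discrete tokens. The bin count comes from the text above; the value range of -1.0 to 1.0, the evenly spaced bins, and the function names are my assumptions for illustration, not details from the paper.

```python
NUM_BINS = 21  # from the post: 21 bins per direction

def to_bin(value, low=-1.0, high=1.0, num_bins=NUM_BINS):
    """Map a continuous value to a bin index in [0, num_bins - 1].

    The range [low, high] is a placeholder assumption; values outside
    it are clipped to the nearest edge.
    """
    clipped = min(max(value, low), high)
    return round((clipped - low) / (high - low) * (num_bins - 1))

def from_bin(index, low=-1.0, high=1.0, num_bins=NUM_BINS):
    """Recover the bin's centre value from its index."""
    return low + index * (high - low) / (num_bins - 1)

def tokenize_action(dx, dy):
    """One token index per direction, as described for x and y."""
    return to_bin(dx), to_bin(dy)

x_token, y_token = tokenize_action(0.1, -0.1)
```

With an odd number of bins, a zero translation lands exactly on the middle bin, which is one plausible reason to pick 21 rather than 20.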

The gaming dataset however consists largely of Minecraft demonstrations collected by contractors. Not totally surprising since Minecraft is now a Microsoft game that defines a virtual world with quite straightforward, not too sure if I would call it simple, rules. The team also used gameplay from Bleeding Edge, a team-based multiplayer game, but tbh, I can’t talk too much about that game. I copied a link in. Feels like Fortnite to me.

Now for the final and most interesting dataset (‘healthcare’), the team used real-world recorded scenes from hospital intensive care unit (ICU) rooms using wall-mounted RGB cameras. The dataset was labeled by experienced ICU nurses who generated captions of extracted 5- to 10-second video clips depicting common nursing activities in the ICU. The team then used the Richmond Agitation-Sedation Scale (RASS) score to assess the patient’s state of agitation and sedation. Wild stuff.

Model development approach

I really enjoyed how the team explained in detail how they developed their model.

To quote them directly from the paper:

We used a linear warmup cosine learning rate scheduler, with an initial learning rate of 0.0001. We initialized the vision component of our model with the CLIP base model with patch size 16, and initialized the language and action components with OPT-125M. We used 12 nodes of 16 V100 GPUs for 175 hours for all of our pre-training.

What is all of this stuff?

Linear Warmup Cosine Learning Rate Scheduler:

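The learning rate scheduler determines how the learning rate changes during training. During the warmup phase, the learning rate starts at a small value and is increased linearly over a certain number of updates (or epochs). The quote gives 0.0001 (or 1e-4) as the initial learning rate; in the usual form of this schedule that is the value reached at the end of warmup, though the quote does not spell this out. The warmup phase helps stabilize training by ramping the learning rate up gradually rather than starting at full strength.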

After the warmup phase, the learning rate follows a cosine schedule. It decreases smoothly over time, resembling a cosine curve. This approach helps the model converge more effectively.

In other words, the learning rate starts low, increases linearly during warmup, and then decreases in a cosine-like manner.
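Here is a minimal sketch of such a schedule in plain Python. The quote only gives the 0.0001 figure, so treat everything else as an assumption: I read 1e-4 as the peak reached at the end of warmup, and `warmup_steps`, `total_steps`, and `min_lr` are hypothetical parameters the quote does not mention.

```python
import math

def warmup_cosine_lr(step, total_steps, warmup_steps, peak_lr=1e-4, min_lr=0.0):
    """Learning rate for a 0-indexed training step.

    peak_lr=1e-4 is one reading of the quoted figure; the step counts
    and min_lr are illustrative assumptions.
    """
    if step < warmup_steps:
        # Linear ramp that reaches peak_lr on the last warmup step.
        return peak_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, capped at 1.
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    # Cosine decay from peak_lr down to min_lr.
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

# Learning rate at every step of a toy 100-step run with 10 warmup steps.
schedule = [warmup_cosine_lr(s, total_steps=100, warmup_steps=10) for s in range(100)]
```

In this toy run the rate climbs for the first ten steps, peaks at 1e-4, and then falls along the cosine curve towards zero.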

Model Initialization:

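The vision component of the model is initialized using the CLIP base model with a patch size of 16. CLIP is a model that combines vision and language understanding. The language and action components are initialized from OPT-125M, so none of the three parts starts from scratch.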

Neat.

What does success feel like?

As we have seen in the graphic above, the team uses an action decoder, a visual decoder, and a language decoder to translate the training data into the model. For this purpose the team used a joint encoder, which has the following benefits:

It allows for the use of action, image, and video with language datasets for pre-training.

It increases the capabilities of the model across a variety of downstream tasks (e.g., video understanding, temporal reasoning, action prediction, interaction with human feedback, etc.).

It reduces the overall model size.

As usual, I am not commenting on the metrics they have used. However, I think that the team is correct in commenting that by pre-training on a mixture of robotics and gaming data, their model is effective. I would also concur that their foundational model, being trained on a variety of data, has developed visual, language, and agent capabilities that may lead to a powerful and general-purpose tool that can execute a variety of interactive tasks.

Second personal side note. What makes me proud is seeing that even industry-leading experts from Microsoft see the value in using robotic sequences and gameplay data on top of textual information for the development of their agents.

In general, it is a cool research project that I hope will lead to significant improvements in the near future.

While I thought their choice of data was a bit weird, I hope that it helps them develop a better model.

https://www.microsoft.com/en-us/research/uploads/prod/2023/12/EmergentAgentAI-657efcb33d5b4-2048x1432.png