Paper Review | External Reasoning

Towards Multi-Large-Language-Models Interchangeable Assistance with Human Feedback

The more I explore working relationships with autonomous agents, the more I realize the importance of memory management and of improved reasoning through more efficient use of context windows and access to external knowledge. It was with great pleasure that I came across this paper by a student at the University of Adelaide, which explores a similar direction.

Project Goal: How can we improve the reasoning capabilities of agents when using external sources?

Problem: LLMs are trained on a fixed, historical body of knowledge and have no easy way to integrate new knowledge. Agents are also still limited in their reasoning capability. How can we improve it?

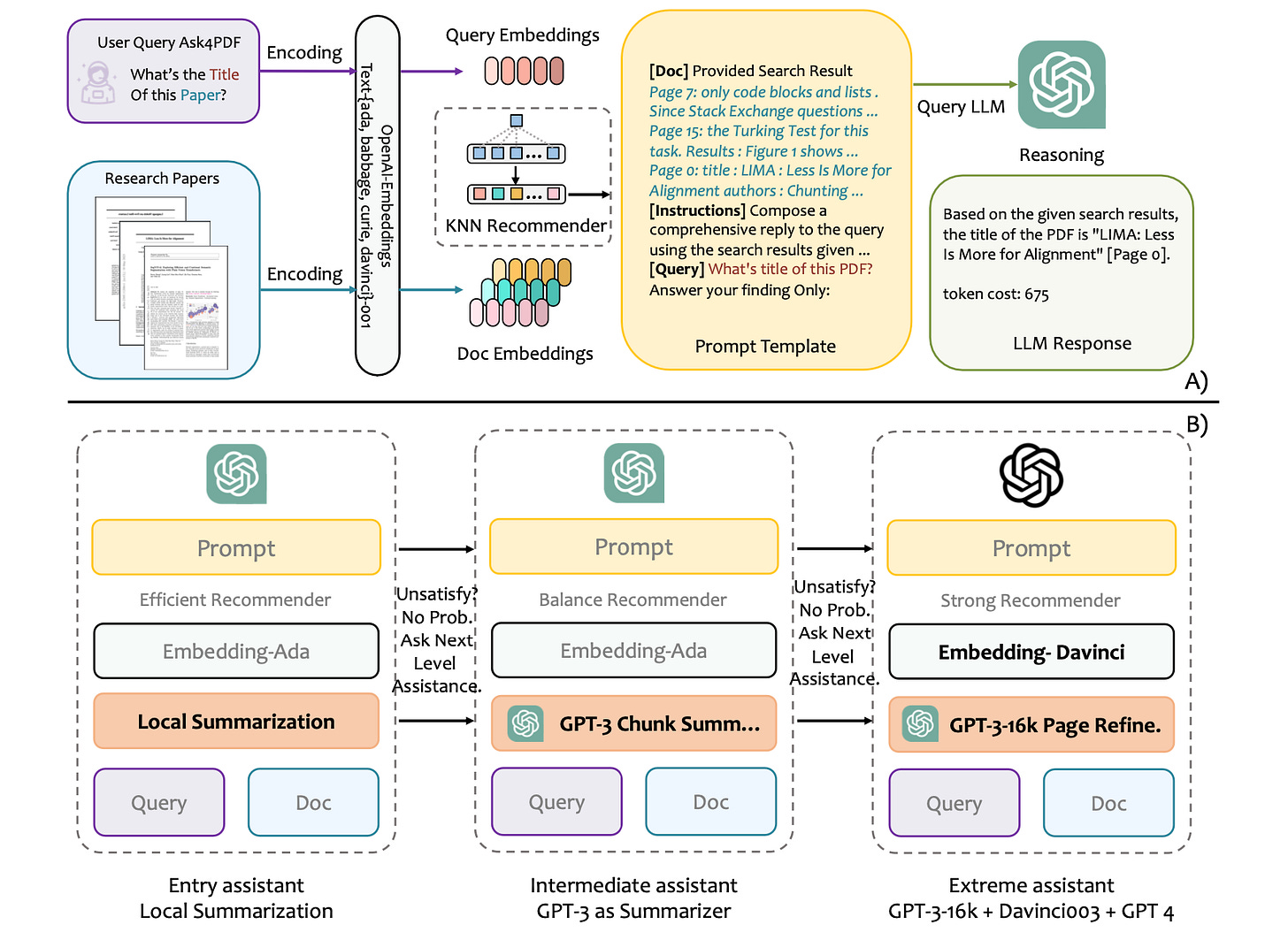

Proposed Solution: This paper proposes a three-tier approach consisting of efficient embedding of research papers using OpenAI’s embeddings, prompt templates, and a human-feedback-based escalation procedure.
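To make the three tiers more concrete, here is a minimal sketch of how they might fit together, assuming a simple design that is not taken from the paper: document chunks are ranked by cosine similarity to the query embedding, the top hits fill a prompt template, and the query is escalated to a human when the best match falls below a similarity threshold. The toy vectors stand in for OpenAI embeddings, and `Chunk`, `PROMPT_TEMPLATE`, `retrieve`, `build_prompt_or_escalate`, and the `0.75` threshold are all hypothetical names and values chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    """A piece of a research paper plus its (hypothetical) embedding vector."""
    text: str
    embedding: list[float]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical prompt template (tier 2): retrieved context is slotted in verbatim.
PROMPT_TEMPLATE = (
    "Answer the question using only the context below.\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def retrieve(query_emb: list[float], chunks: list[Chunk], k: int = 2) -> list[tuple[Chunk, float]]:
    """Tier 1: return the k chunks most similar to the query, with their scores."""
    scored = [(c, cosine(query_emb, c.embedding)) for c in chunks]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt_or_escalate(
    question: str,
    query_emb: list[float],
    chunks: list[Chunk],
    k: int = 2,
    threshold: float = 0.75,  # assumed cut-off, not from the paper
) -> tuple[str, str]:
    """Fill the template, or escalate to a human (tier 3) if retrieval looks weak."""
    hits = retrieve(query_emb, chunks, k)
    if not hits or hits[0][1] < threshold:
        return ("escalate", f"Low retrieval confidence for {question!r}; ask a human.")
    context = "\n".join(c.text for c, _ in hits)
    return ("prompt", PROMPT_TEMPLATE.format(context=context, question=question))
```

With three-dimensional toy vectors, a query close to the stored chunks yields a filled prompt, while an unrelated query (similarity 0 to everything) is routed to a human. A real system would replace the toy vectors with calls to an embedding model and tune the threshold empirically.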

Opinion: The paper spends a long time setting up a RAG-like (retrieval-augmented generation) system and does not explore the memory algorithms in much depth. However, I think its approach to prompt templates is quite innovative, and combined with the contextual document search it is worth exploring further. Personally, I am more interested in systems that ask questions of humans, but that has no bearing on the quality of the paper.

Well, let’s dive in.