Paper Review | RoboAgent

Generalization and Efficiency in Robot Manipulation via Semantic Augmentations and Action Chunking

Introduction

RoboAgent is a research project by Meta and Carnegie Mellon University.

Why is it interesting? RoboAgent claims to have beaten Google DeepMind's RT-1 and to have performed on par with RT-2. Big if true.

Project Goal: Achieve generalization by training a single robot that can manipulate arbitrary objects in a variety of settings.

Example: Telling a robot to “make tea” should not require a programmer to separately train it to (1) identify a tea bag, (2) pick it up from the cupboard, and (3) put it in a cup of hot water. Instead, the robot should “get it” automatically across a variety of settings.

Problem: Acquiring training data is expensive. Existing data is proprietary, and capturing new data with specialized Franka Emika robot arms is a slow, manual process. Visual imitation learning approaches, i.e., learning from video data, overfit to specific scenes and locations and therefore don’t generalize well.

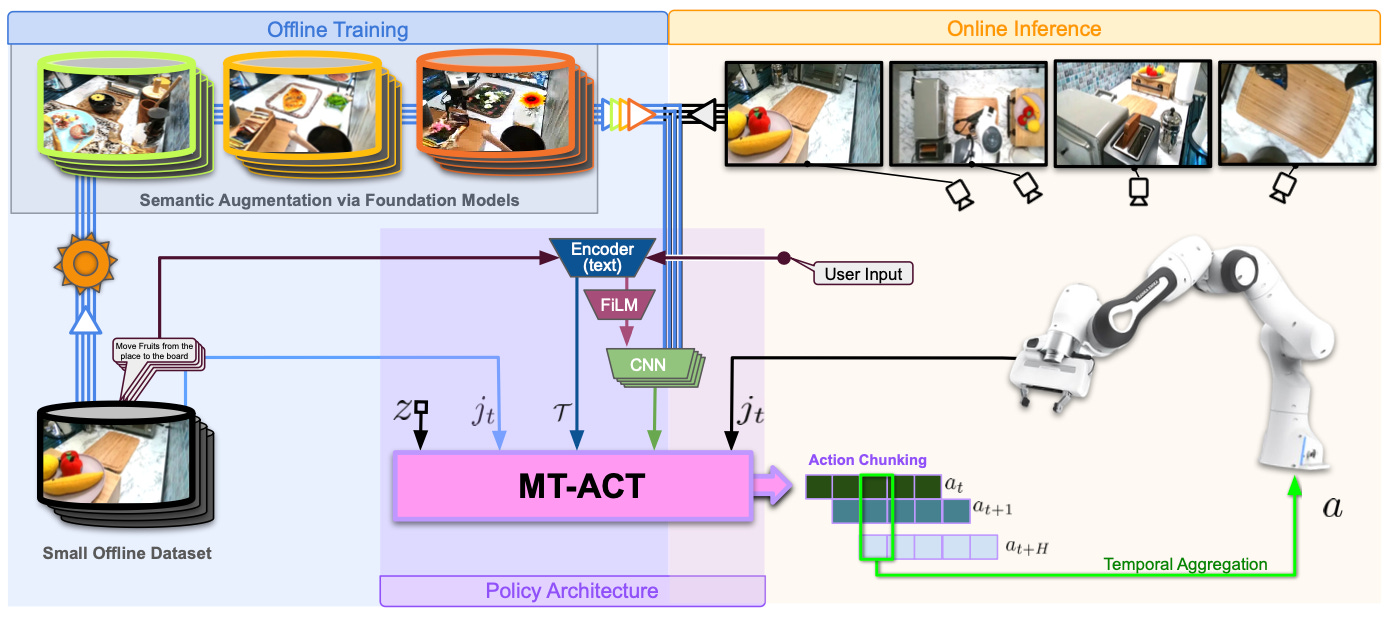

Proposed Solution: A framework called MT-ACT (Multi-Task Action Chunking Transformer), consisting of (a) semantic augmentations, (b) action representations, and (c) action commands, for training universal agents capable of performing diverse skills across multiple tasks.

Opinion: RoboAgent may not look as shiny as Boston Dynamics’ demos, but it is a genuine advance in what's possible. (9/10)

Links to the RoboAgent paper, site, GitHub (upcoming), and trajectory data

Let’s dive in by explaining some terms.