GPTQ Algorithm: Optimizing Large Language Models for Efficient Deployment

When it comes to quantization, compression is all you need.

Introduction

Have you been on Hugging Face lately, seen models with suffixes like GGUF, AWQ, or GPTQ, and wondered what they mean?

Or

You have been working on a mobile AI agent, wanted to run inference with a pre-trained LLM locally on a phone, and then realized that your app's performance is terrible.

Then you might be interested in learning more about GPTQ and model quantization.

The problem practitioners like us face is that models like GPT-3 require multiple GPUs to run: the parameters of a standard GPT-3 175B alone occupy 326GB (counting in multiples of 1024) of memory, even when stored in the compact float16 format. With the GPTQ algorithm, it is possible to reduce the bit width to 3 or 4 bits per weight with negligible accuracy degradation, through a process called quantization. This effectively shrinks the model, making it more efficient and easier to use.
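To make the 326GB figure concrete, here is a rough back-of-the-envelope sketch. The helper `weight_memory_gib` is a name I made up for illustration, and the estimate counts only the raw weight bits. It assumes no extra storage for quantization metadata such as scales or zero points, so real quantized checkpoints will be somewhat larger.

```python
# Rough estimate of the memory needed for the weights alone.
GIB = 1024 ** 3  # bytes per GiB, i.e. "counting in multiples of 1024"


def weight_memory_gib(num_params: int, bits_per_weight: float) -> float:
    """Storage for the raw weights in GiB (hypothetical helper, metadata ignored)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / GIB


NUM_PARAMS = 175_000_000_000  # GPT-3 175B

for bits in (16, 4, 3):
    size = weight_memory_gib(NUM_PARAMS, bits)
    print(f"{bits:>2} bits per weight: {size:6.1f} GiB")
```

At 16 bits per weight this lands on roughly 326 GiB, matching the number above. At 4 bits the same weights need about a quarter of that, which is why low-bit quantization makes the difference between needing several GPUs and needing far fewer.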

Quantization

As mentioned, quantization reduces the number of bits used to represent each weight in the model.

Wait, what weights?

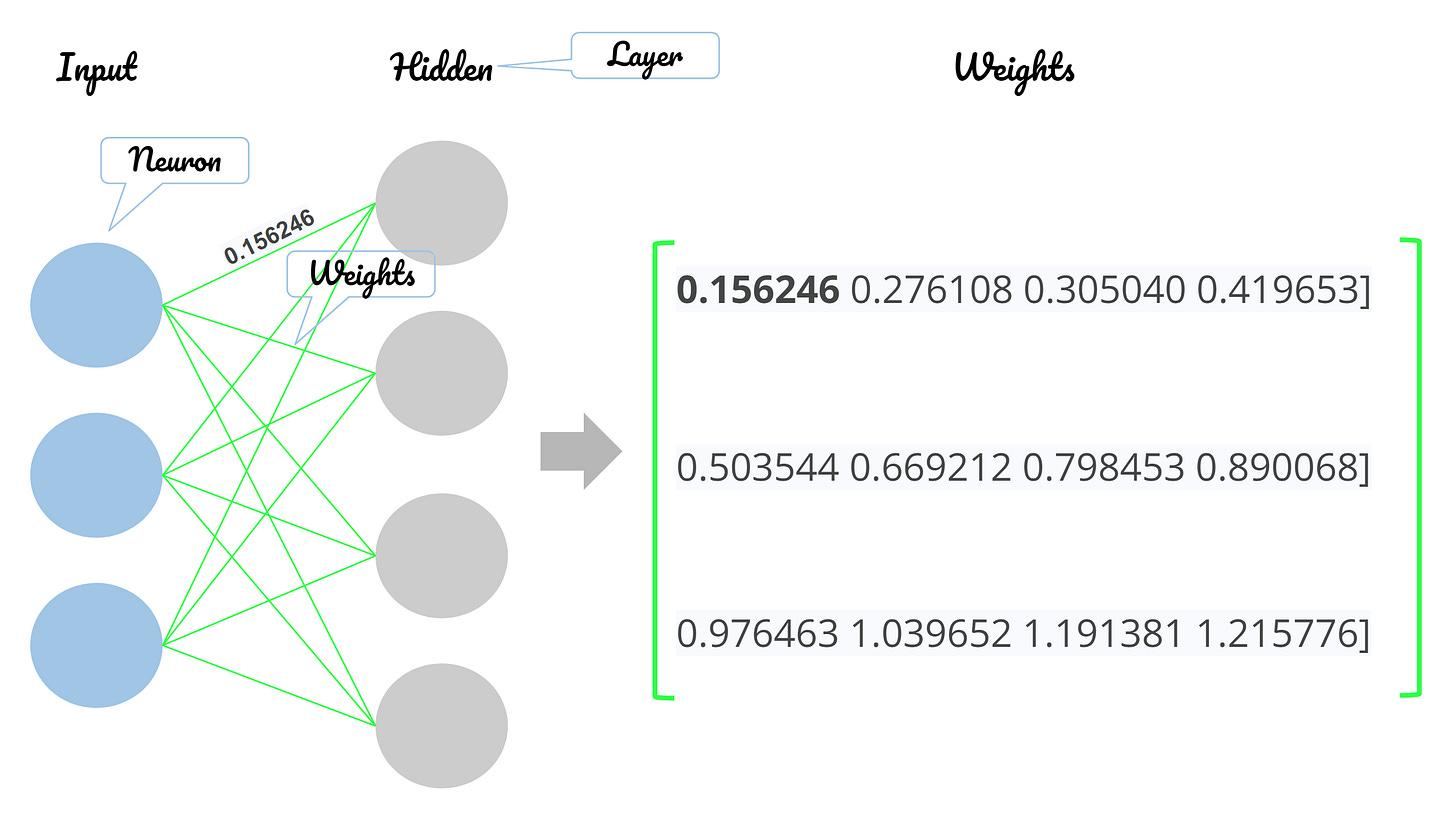

To quickly recap: neural networks are built of layers (input, hidden, output), which consist of neurons, and of weights that connect those neurons. For this example, I focus only on the connections from the input layer to the hidden layer, not on architectures with several hidden layers or on the output layer.

Anyway, our weights can be represented as a matrix, from weight[1,1] to weight[n,m].

When we compute our weights during training, we commonly store them in a floating-point datatype such as float32, or the more compact float16, to retain precision.
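As a minimal sketch of what that looks like in practice, the snippet below builds a tiny weight matrix with NumPy and stores it in both datatypes. The layer sizes and the random values are made up for illustration, and note that NumPy indexes from 0, so weight[1,1] in the text corresponds to `weights_fp32[0, 0]` here.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical layer sizes: n input neurons, m hidden neurons.
n_inputs, n_hidden = 4, 3

# weights[i, j] connects input neuron i to hidden neuron j.
weights_fp32 = rng.standard_normal((n_inputs, n_hidden)).astype(np.float32)
weights_fp16 = weights_fp32.astype(np.float16)

# float32 uses 4 bytes per weight, float16 uses 2 bytes per weight.
print("float32 bytes:", weights_fp32.nbytes)
print("float16 bytes:", weights_fp16.nbytes)

# Halving the storage costs a little precision on every weight.
max_error = np.abs(weights_fp32 - weights_fp16.astype(np.float32)).max()
print("largest rounding error:", max_error)
```

Going from float32 to float16 halves the storage at the cost of a small rounding error per weight. Quantization pushes the same trade-off further, down to a handful of bits.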