DeepMind's RT-X: Revolutionizing Robot Learning with Open X-Embodiment

Let's blend our skills and stir up success as a team smoothie!

While OpenAI and Microsoft have been thriving over the last few months, it might have appeared to the layperson that Google and DeepMind were lagging. The announcement of RT-X suggests that we may not be seeing the full picture of what Google is working on, and with whom.

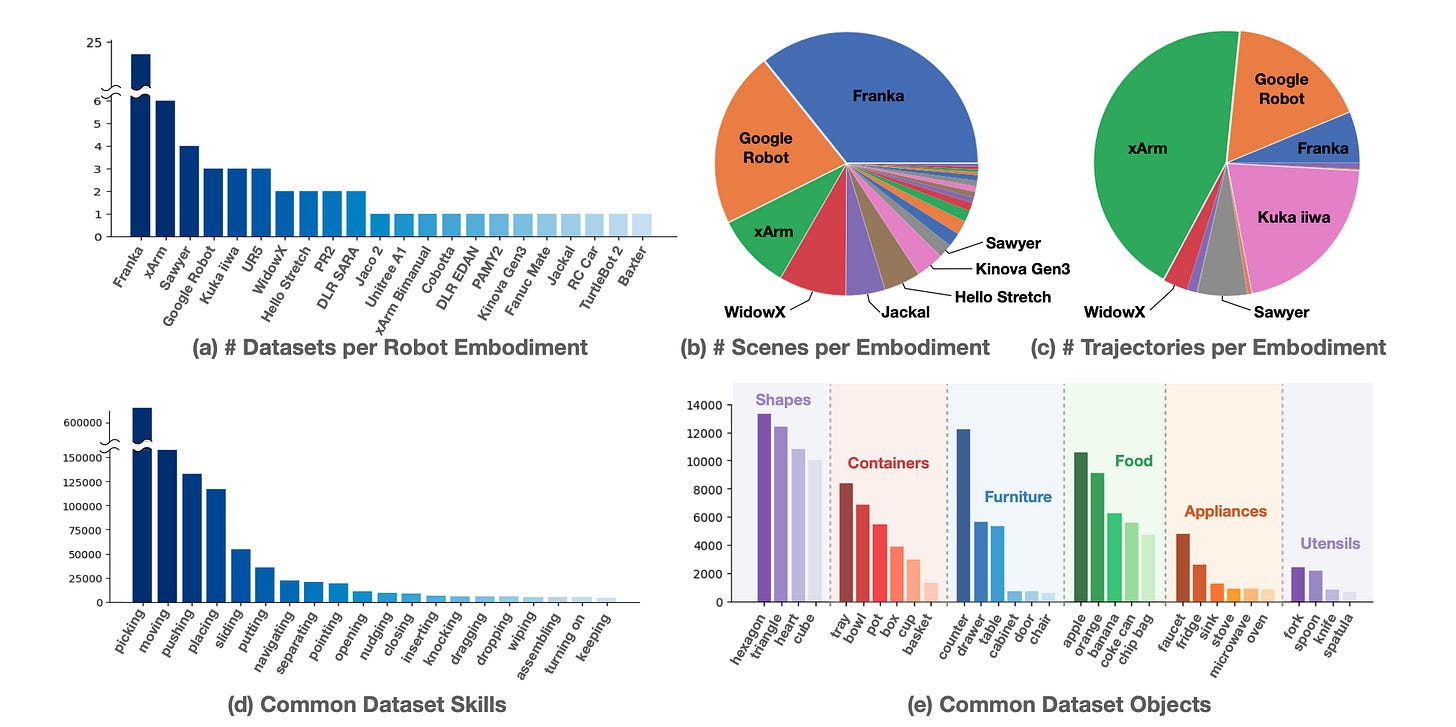

In the case of Open X-Embodiment, a group of leading universities has joined forces to build one of the most ambitious datasets for robotics research worldwide.

So what is RT-X?

Introduction

Goal: Is there a way to train robots more effectively, so that they generalize better and learn new capabilities more quickly?

Specifically, is it possible to:

demonstrate that policies trained on data from a variety of robots and environments benefit from positive transfer, performing better than policies trained only on data from each individual setup;

define datasets, data formats, and models for the robotics community to enable future research on X-embodiment models?

Problem: Historically, programming robots has been a time-consuming and specialized task in which changing a single variable often requires starting from scratch, significantly increasing development time. Researchers then began training robots with computer vision, which exposed another problem: while computer vision and NLP can leverage large datasets sourced from the web, comparably large and broad datasets for robotic interaction are hard to come by.

Solution: The research team developed a new model, RT-X, trained on the X-embodiment data. Without any mechanisms to reduce the embodiment gap, it improved over policies trained only on data from each individual setup, and it exhibited better generalization and new capabilities.

Let’s dive in.