Let’s start off 2025 with a banger. We all know that agents are the future, which is one of the reasons why we are here. The next decade will be insane, and you will not want to miss this!

Here are my top 10 interesting CES announcements from Nvidia.

Over the next few years, cognitive reasoning agents will achieve human-like skill levels in terms of reliability and accuracy. As a result, there will be agents that have access to domain-specific information inside our companies, governed by access control, and that are able to reason about their tasks and collaborate human-to-agent and agent-to-agent, kicking off a productivity increase not seen since the invention of the steam engine. Jensen Huang, CEO of Nvidia, made some paradigm-setting remarks at CES this year that will be especially interesting for enterprise customers, and I think for smaller companies alike.

Watch his keynote here:

The Next Phase of GenAI is AI Agents

In his already seminal keynote, Jensen Huang emphasized that the next major leap is the transition to agentic AI, which I was really happy to hear. As we all know, unlike traditional narrow AI, which focused on understanding data, or GenAI, which generates new content, agentic AI is about AIs that can plan, reason, and act (!) independently. Our new co-workers will be able to perceive their environment, reason about it, plan actions, and execute them autonomously. While this decreases human intervention, it will ultimately increase the need for governance and monitoring. So, in a nutshell, we will all be middle managers in 5 years.
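To make the perceive, reason, plan, act cycle a bit more tangible, here is a minimal toy sketch. Everything in it is my own illustration and not something shown in the keynote: the thermostat-style `Environment`, the `Agent` class, and the `audit_log` are hypothetical names, and the "reasoning" is a simple subtraction instead of a model call. The audit log stands in for the governance and monitoring part.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    temperature: float  # hypothetical state the agent can observe and change


@dataclass
class Agent:
    target: float
    audit_log: list = field(default_factory=list)  # record of every action, for monitoring

    def perceive(self, env: Environment) -> float:
        return env.temperature

    def reason(self, observation: float) -> float:
        # Toy "reasoning": how far are we from the goal?
        return self.target - observation

    def plan(self, gap: float) -> str:
        if abs(gap) < 0.5:
            return "idle"
        return "heat" if gap > 0 else "cool"

    def act(self, env: Environment, action: str) -> None:
        if action == "heat":
            env.temperature += 1.0
        elif action == "cool":
            env.temperature -= 1.0
        self.audit_log.append((action, env.temperature))


def run(agent: Agent, env: Environment, max_steps: int = 10) -> float:
    """Repeat the perceive -> reason -> plan -> act cycle until the agent idles."""
    for _ in range(max_steps):
        action = agent.plan(agent.reason(agent.perceive(env)))
        agent.act(env, action)
        if action == "idle":
            break
    return env.temperature


env = Environment(temperature=18.0)
agent = Agent(target=21.0)
final_temperature = run(agent, env)
```

The agent decides on its own when to stop, and a human only has to read the `audit_log` afterwards, which is roughly the middle-manager job described above.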

Three Scaling Laws

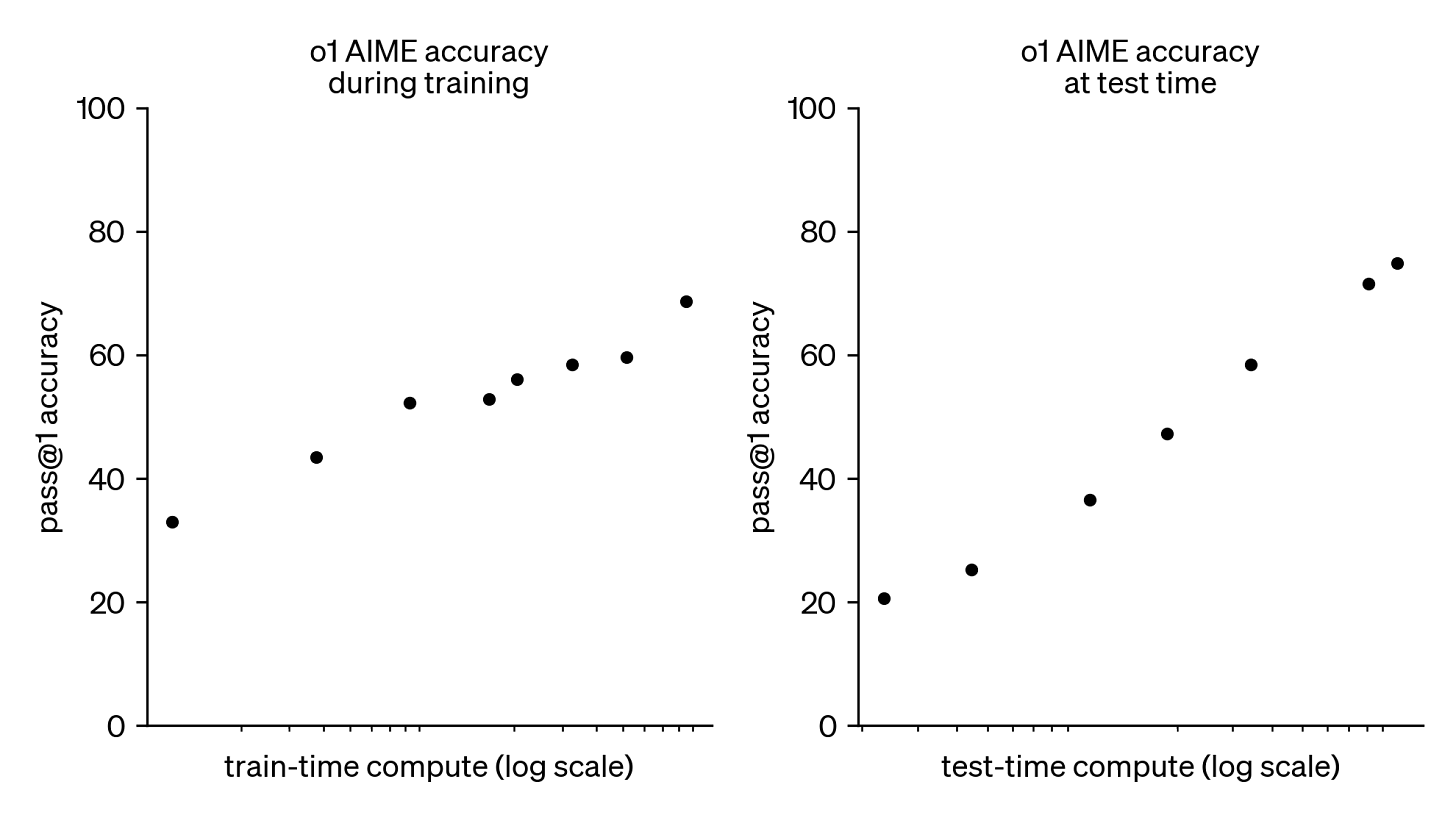

He also talked about a framework of three scaling laws that underpin the development of agentic AI. Pre-training focuses on creating high-quality general-knowledge models by training on Internet-size datasets. Post-training is where domain-certified experts fine-tune models for specific use cases in their respective industries. Test-time scaling, the third law in the framework, stands out as a new addition specifically for agentic AI. This technique allows models to spend more time finding the right answer by dynamically allocating computational resources to more complex tasks during operation. This increases the chance that agents can handle varying degrees of complexity efficiently without needing constant retraining. Together, these three laws build a robust framework for developing adaptable and intelligent agents capable of solving real-world problems.
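One common way to picture test-time scaling is best-of-N sampling: ask the model several times and keep the answer a verifier likes best, with more samples for harder tasks. The sketch below is only a toy under loud assumptions. `propose_answer`, `score`, and `sample_budget` are hypothetical stand-ins I made up, the "model" is just Gaussian noise around the right answer, and the verifier cheats by knowing the true value. It is not how Nvidia or any specific model implements this.

```python
import random


def propose_answer(true_value: float, difficulty: float, rng: random.Random) -> float:
    """Stand-in for one model sample: guesses get noisier on harder tasks."""
    return true_value + rng.gauss(0.0, difficulty)


def score(candidate: float, true_value: float) -> float:
    """Stand-in verifier (higher is better). A real verifier would not know the true value."""
    return -abs(candidate - true_value)


def sample_budget(difficulty: float, base: int = 1, max_samples: int = 64) -> int:
    """Allocate more samples, i.e. more inference compute, to harder tasks."""
    return min(max_samples, base + int(difficulty * 8))


def best_of_n(true_value: float, difficulty: float, n: int, rng: random.Random) -> float:
    """Draw n candidate answers and keep the one the verifier scores highest."""
    candidates = [propose_answer(true_value, difficulty, rng) for _ in range(n)]
    return max(candidates, key=lambda c: score(c, true_value))


difficulty = 2.0
n = sample_budget(difficulty)  # a harder task gets a larger budget
single = best_of_n(10.0, difficulty, 1, random.Random(0))
scaled = best_of_n(10.0, difficulty, n, random.Random(0))
print(f"samples={n} single_error={abs(single - 10.0):.3f} scaled_error={abs(scaled - 10.0):.3f}")
```

The model weights never change here. The only knob is how much compute is spent at answer time, which is why no retraining is needed to handle harder tasks.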


A Blueprint for Enterprise Users

Agents are particularly difficult for enterprise users to implement, as they are usually held back by the lack of effective cost control and monitoring measures, by data privacy and security concerns, and by inconsistent performance, the last of which is specifically audit-relevant. To democratize the development of AI agents, NVIDIA introduced open-source blueprints for enterprises. Collaborating with agent framework leaders like Weights & Biases, CrewAI, LangChain, LlamaIndex, and Pipecat (never heard of them), they designed a comprehensive toolkit aimed at simplifying AI agent integration. One example with LangChain integrates Llama 3.3 70B NVIDIA NIM microservices, enabling users to define a topic and an outline that guide an agent in retrieving web-based information. The agent then delivers a report in the specified format. Fair enough, I suppose. On paper, these blueprints should support automating repetitive tasks, enhancing decision-making processes, and scaling AI adoption across industries. That makes one wonder, though, how many repetitive tasks companies still experience after two decades of digital transformation, especially post-Covid. But hey, templates will help companies execute faster and therefore reduce cost and increase adoption. So this might be a win for all of us who are working on agents.

Nemotron

NVIDIA revealed its Nemotron model families, explicitly designed for advanced agentic AI use cases. I have worked with NeMo in the past and generally liked its performance.

These new models excel in tasks that require tool usage, enabling agents to interact with software and hardware systems. Nemotron models are optimized for tasks like complex problem-solving, autonomous decision-making, and interacting with multiple APIs, and they are expected to be adaptable for both general-purpose applications and domain-specific “vertical” enterprise requirements. I am looking forward to taking them for a spin.

Agents for Video Analytics

The launch of Metropolis Agents marks a leap forward in video analytics. Built on, yes, you guessed it, a blueprint, these agents combine tools and models like Cosmos, NIM, and Llama Neotron. Well, the naming convention Nemotron-Neotron is a bit confusing. Anyway, these agents are designed to analyze real-time video feeds for interactive searches, generate automated reports, and perform advanced monitoring. This has far-reaching applications in fields like security, urban planning, and retail analytics. For instance, these agents can identify anomalies in public spaces, provide actionable insights for city management, or track customer behavior in retail settings. Their ability to handle large-scale video data autonomously is set to revolutionize industries reliant on visual information. Since this brings up dystopian concerns, I would prefer to see solutions implemented in farming and manufacturing rather than city management.

An AI Agents PC

In my opinion, inference cost will be a key issue for running agents at scale because they need to engage with their “brain” an order of magnitude more often than traditional AI, especially if you consider test-time scaling. Nvidia is bringing the power of AI agents to consumer-grade PCs, emphasizing compatibility with Windows systems. When we were discussing Apple Intelligence, the goal was to have the brainpower of OpenAI’s o3 or Opus on your phone. The desire for localized AI capabilities means reduced reliance on cloud infrastructure, enabling faster response times and better data privacy. I am really curious how the reduced reliance on the cloud plays out, as Nvidia is a leader in the datacenter GPU market.

Of course, this would enable PCs to become intelligent hubs capable of performing tasks like personal assistance, content creation, and even advanced analytics locally. But that is not too far away as a concept.
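To put the inference cost point into numbers, here is a back-of-the-envelope sketch. All figures are hypothetical placeholders I picked for illustration (the price per million tokens, the token counts, the 10x call multiplier, and the 4x test-time multiplier), not real pricing or measurements.

```python
def cost_per_task(model_calls: int, tokens_per_call: int, price_per_million_tokens: float) -> float:
    """Cost of one task = number of model calls x tokens per call x token price."""
    return model_calls * tokens_per_call * price_per_million_tokens / 1_000_000


PRICE = 10.0            # hypothetical price per million tokens
TOKENS = 2_000          # hypothetical tokens per model call
AGENT_CALLS = 10        # "an order of magnitude more often" than a single call
TEST_TIME_FACTOR = 4    # hypothetical extra tokens spent on test-time scaling

single_shot = cost_per_task(1, TOKENS, PRICE)
agent = cost_per_task(AGENT_CALLS, TOKENS, PRICE)
agent_with_tts = cost_per_task(AGENT_CALLS, TOKENS * TEST_TIME_FACTOR, PRICE)

print(f"single call:            {single_shot:.2f}")
print(f"agent:                  {agent:.2f}")
print(f"agent + test-time work: {agent_with_tts:.2f}")
```

Whatever the real numbers turn out to be, the multipliers stack, which is why running part of the workload locally is attractive.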

Agentic AI in Robotics

Hey, I also noted this way back in the day. Agentic AI will be a cornerstone for the future of robotics. Of course I agree. Platforms like Isaac GR00T are designed to research and train humanoid robots using synthetic data (! - Yes) and high-fidelity simulations.

Watch more about it here: